Introduction

A-Painter was the first demo we made using A-Frame. Released over a year ago, it remains one of the most popular WebVR experiences at meetups and exhibitions.

We wanted to show that the browser can deliver native-like VR experiences and push the limits of what A-Frame could do at the time. We’ve seen A-Painter used more and more by professional artists and programmers who test its limits and extend its capabilities. Performance is the first bottleneck people hit with moderately complex drawings due to the increased number of strokes and geometry. In collaborative drawing experiences, performance degrades even faster since multiple users add geometry simultaneously.

Recently, we had some bandwidth and rolled up our sleeves to implement some of the optimization ideas we had collected (Issue #222, PR #241).

Draw calls simplified

There can be several causes of bad performance in your graphics application, but looking at the number of draw calls is a good starting point for an investigation.

A draw call is, as its name indicates, a call to a drawing function on the graphics API with the geometry and material properties we want to render. In WebGL, it could be a call to gl.drawElements or gl.drawArrays. These calls are expensive, so we want to keep their number as low as possible.

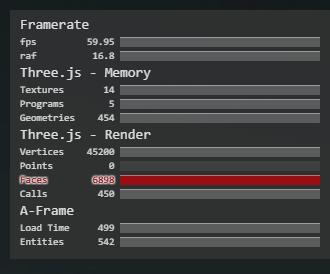

We will use the Balrog scene by @feiss to measure the impact of the optimizations I'm proposing here. The following image shows the statistics when rendering this scene on my computer (Windows i7 GTX1080):

We should identify which numbers affect our performance:

- 14 textures: Every brush that needs a material (lines and stamps) creates its own texture. Instead, they could reuse an atlas with all the textures.

- 542 entities: We created one entity per stroke.

- 454 geometries: One entity per stroke also means one Object3D, Mesh, BufferGeometry, and Material per stroke.

- 450 calls: The number of draw calls we would like to optimize.

To reduce the number of draw calls we should:

- Reduce the number of textures.

- Reduce the number of materials, since switching from one material to another causes a new draw call.

- Reduce the number of geometries by merging all the meshes we can into a bigger mesh, so we can use just one draw call to paint multiple geometries.

In the following sections, we will go through these steps, explaining how we can apply them to our application.
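As a rough illustration of the third step, here is a minimal sketch of what merging means at the data level: several per-stroke position arrays are copied into one larger array that could back a single mesh. The mergePositions helper is hypothetical and uses plain typed arrays instead of three.js objects. It is not the code A-Painter uses.

```javascript
// Hypothetical helper: concatenates per-stroke position arrays (x, y, z
// triplets) into one Float32Array that a single mesh could render.
function mergePositions(strokes) {
  let total = 0;
  for (const stroke of strokes) {
    total += stroke.length;
  }
  const merged = new Float32Array(total);
  let offset = 0;
  for (const stroke of strokes) {
    merged.set(stroke, offset); // copy this stroke after the previous ones
    offset += stroke.length;
  }
  return merged;
}

// Two tiny "strokes" with one triangle each.
const strokeA = new Float32Array([0, 0, 0, 1, 0, 0, 0, 1, 0]);
const strokeB = new Float32Array([2, 0, 0, 3, 0, 0, 2, 1, 0]);
const merged = mergePositions([strokeA, strokeB]);
console.log('vertices in merged buffer:', merged.length / 3);
```

With one merged buffer per material, the renderer can issue one draw call for all the strokes it contains instead of one call per stroke.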

Materials

Atlas

The first step is to reduce the number of materials created, as switching from one material to another will cause a new draw call.

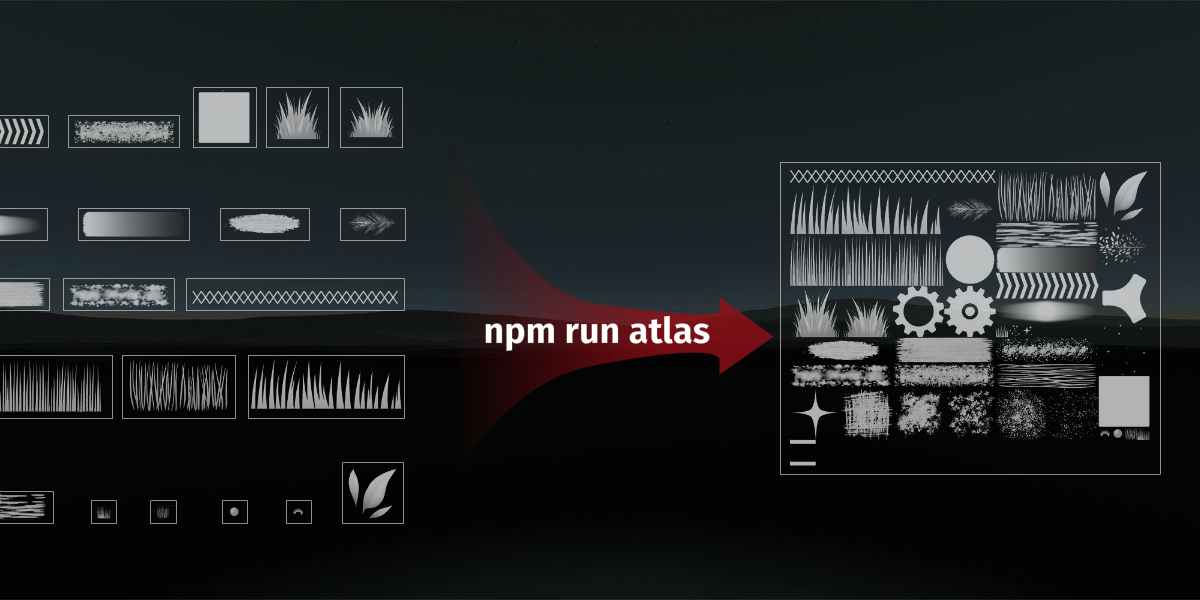

We will start by creating an atlas that contains the textures for every brush. We pack them with the spritesheet.js tool, adding a new npm command called atlas that runs spritesheet-js:

"atlas": "spritesheet-js --name brush_atlas --path assets/images brushes/*.png"

If we now execute npm run atlas, it will create a PNG image with all our brush textures and a JSON file with the information needed to locate them inside the atlas.

The generated JSON is very easy to parse and includes the size of the generated atlas (meta.size) and the list of included images with their positions on the atlas (frames).

{

"meta": {

"image": "brush_atlas.png",

"size": {"w":3584,"h":2944},

"scale": "1"

},

"frames": {

"stamp_grass.png":

{

"frame": {"x":0,"y":128,"w":1536,"h":512},

"rotated": false,

"trimmed": false,

"spriteSourceSize": {"x":0,"y":0,"w":1536,"h":512},

"sourceSize": {"w":1536,"h":512}

},

"lines4.png":

{

"frame": {"x":0,"y":0,"w":2048,"h":128},

"rotated": false,

"trimmed": false,

"spriteSourceSize": {"x":0,"y":0,"w":2048,"h":128},

"sourceSize": {"w":2048,"h":128}

},

"stamp_fur2.png":

{

"frame": {"x":0,"y":640,"w":1536,"h":512},

"rotated": false,

"trimmed": false,

"spriteSourceSize": {"x":0,"y":0,"w":1536,"h":512},

"sourceSize": {"w":1536,"h":512}

},

Now we need a simple way to convert the local UV coordinates to the new coordinates inside the atlas space. To ease this task, we will create a helper class called Atlas that will parse the generated JSON and provide two functions to convert our UV coordinates.

function Atlas () {

this.map = new THREE.TextureLoader().load('assets/images/' + AtlasJSON.meta.image);

}

Atlas.prototype = {

getUVConverters (filename) {

if (filename) {

filename = filename.replace('brushes/', '');

return {

convertU (u) {

var totalSize = AtlasJSON.meta.size;

var data = AtlasJSON.frames[filename];

if (u > 1 || u < 0) {

u = 0;

}

return data.frame.x / totalSize.w + u * data.frame.w / totalSize.w;

},

convertV (v) {

var totalSize = AtlasJSON.meta.size;

var data = AtlasJSON.frames[filename];

if (v > 1 || v < 0) {

v = 0;

}

return 1 - (data.frame.y / totalSize.h + v * data.frame.h / totalSize.h);

}

};

} else {

return {

convertU (u) { return u; },

convertV (v) { return v; }

};

}

}

};

Using this helper, we can easily convert the following code:

material.map = "lines1.png";

uvs[0].set(0, 0);

uvs[1].set(1, 1);

Into this:

material.map = "atlas.png";

var converter = atlas.getUVConverters("lines1.png");

uvs[0].set(converter.convertU(0), converter.convertV(0));

uvs[1].set(converter.convertU(1), converter.convertV(1));

Thanks to the atlas technique, the number of textures in our app is reduced from 30 to 1.
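To make the conversion concrete, here is a small worked example that applies the same arithmetic as convertU and convertV to the lines4.png frame from the JSON excerpt above. The toAtlasUV function and the trimmed-down atlasJSON object are stand-ins for illustration only. The real helper reads the generated JSON file.

```javascript
// Trimmed copy of the atlas metadata shown earlier (only the fields we need).
const atlasJSON = {
  meta: { size: { w: 3584, h: 2944 } },
  frames: {
    'lines4.png': { frame: { x: 0, y: 0, w: 2048, h: 128 } }
  }
};

// Hypothetical helper: maps a local (u, v) in [0, 1] to atlas space,
// using the same formula as convertU / convertV.
function toAtlasUV(filename, u, v) {
  const size = atlasJSON.meta.size;
  const frame = atlasJSON.frames[filename].frame;
  return {
    u: (frame.x + u * frame.w) / size.w,
    // The atlas JSON measures y from the top, UV space from the bottom.
    v: 1 - (frame.y + v * frame.h) / size.h
  };
}

const uv00 = toAtlasUV('lines4.png', 0, 0);
const uv11 = toAtlasUV('lines4.png', 1, 1);
console.log(uv00, uv11);
```

Local (0, 0) maps to (0, 1) and local (1, 1) maps to roughly (0.571, 0.957), so the brush only samples the strip of the atlas that holds its own texture.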

Vertex colors

But we still have a problem: each stroke has a material and a custom value for material.color, making it impossible to share the same material across all the strokes.

Fortunately, reusing the same material when just changing the color has a very simple solution: vertex colors.

We can define a specific color for each vertex of the geometry with vertex colors, which will be multiplied by the material color that is applied to the whole geometry. Since this value will be pure white (1, 1, 1), vertex colors will remain unaltered. (We could use any color other than white to tint the vertex colors).

In order to do that, we should set up the material to use vertex colors...

mainMaterial.vertexColors = THREE.VertexColors;

...and create a new color buffer attribute for our mesh:

var colors = new Float32Array(this.maxBufferSize * 3);

geometry.addAttribute('color', new THREE.BufferAttribute(colors, 3).setDynamic(true));

// Set everything red

var color = [1, 0, 0];

for (var i = 0; i < numVertices; i++) {

colors[3 * i] = color[0];

colors[3 * i + 1] = color[1];

colors[3 * i + 2] = color[2];

}

// Flag the color attribute so the new values are uploaded to the GPU

geometry.attributes.color.needsUpdate = true;

Thus, the number of materials is reduced from the number of individual strokes (hundreds in one painting) to just four: two physically-based render materials (THREE.MeshStandardMaterial) and two THREE.MeshBasicMaterial (one textured and one for solid colors).
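Since each stroke keeps its own color while sharing one material, every stroke only has to write its color into its own range of the color array. The following sketch shows that idea with a plain Float32Array. The setStrokeColor helper and its parameters are hypothetical names, not part of A-Painter or three.js.

```javascript
// Hypothetical helper: writes one RGB color into the slots of the color
// array that belong to a single stroke (3 floats per vertex).
function setStrokeColor(colors, firstVertex, vertexCount, rgb) {
  for (let i = firstVertex; i < firstVertex + vertexCount; i++) {
    colors[3 * i] = rgb[0];
    colors[3 * i + 1] = rgb[1];
    colors[3 * i + 2] = rgb[2];
  }
}

// Room for four vertices: two for a red stroke, two for a blue one.
const colors = new Float32Array(4 * 3);
setStrokeColor(colors, 0, 2, [1, 0, 0]);
setStrokeColor(colors, 2, 2, [0, 0, 1]);
console.log(Array.from(colors));
```

In a real three.js setup, the attribute backing this array would still need its needsUpdate flag set after the write.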

Reducing the number of A-Frame entities

Initially, every stroke generated a new entity, and each entity contains a mesh that is rendered separately in its own draw call. Our goal is to reduce these entities and meshes by merging all the strokes into one big mesh that can be rendered with just one draw call.

We were creating one entity for each stroke, appending it to an <a-entity class="a-drawing"> root entity:

// Start a new stroke

var stroke = brushSystem.startNewStroke();

// Get the root entity

var drawing = document.querySelector('.a-drawing');

// Create a new entity for the current stroke

var entity = document.createElement('a-entity');

entity.className = "a-stroke";

drawing.appendChild(entity);

entity.setObject3D('mesh', stroke.object3D);

stroke.entity = entity;

We can remove the per-stroke entity overhead by adding the new stroke mesh directly to the root entity’s Object3D:

var stroke = brushSystem.startNewStroke();

// Add the stroke mesh directly to the root entity's Object3D

drawing.object3D.add(stroke.object3D);

We also need to modify some pieces of code, like the “undo” functionality, to remove the Object3D instead of the entity. This is only a temporary step, though: next we will get rid of the per-stroke Object3Ds by merging all of them into shared BufferGeometrys, which saves many draw calls.
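A minimal sketch of what an Object3D-based “undo” could look like at this intermediate stage is shown below. To keep it runnable without three.js, Node3D is a tiny stand-in that only mimics the add and remove methods of THREE.Object3D. The addStroke and undo functions and the strokes list are hypothetical names, not A-Painter's actual implementation.

```javascript
// Stand-in for the add/remove behaviour of THREE.Object3D (assumption:
// only the parent/children bookkeeping matters for this example).
class Node3D {
  constructor() {
    this.children = [];
    this.parent = null;
  }
  add(child) {
    child.parent = this;
    this.children.push(child);
    return this;
  }
  remove(child) {
    const index = this.children.indexOf(child);
    if (index !== -1) {
      this.children.splice(index, 1);
      child.parent = null;
    }
    return this;
  }
}

const drawingRoot = new Node3D(); // plays the role of drawing.object3D
const strokes = [];               // strokes in creation order

function addStroke() {
  const strokeObject = new Node3D();
  drawingRoot.add(strokeObject);
  strokes.push(strokeObject);
  return strokeObject;
}

// Undo removes the most recent stroke's object from the root; no DOM
// entity is involved any more.
function undo() {
  const last = strokes.pop();
  if (last) {
    drawingRoot.remove(last);
  }
  return last;
}

addStroke();
addStroke();
undo();
console.log('strokes left:', drawingRoot.children.length);
```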

Shared BufferGeometry

We have already reduced the number of textures, materials, and entities, but we still have an Object3D -> Mesh -> BufferGeometry per stroke, so we haven’t yet reduced the number of draw calls.

Ideally, it would be better to have a very big BufferGeometry and keep adding vertices to it on each stroke, and just send it to the GPU, saving plenty of draw calls.

For this purpose, we will create a class called SharedBufferGeometry that will be instantiated by just passing the type of material we want to use.

function SharedBufferGeometry (material) {

this.material = material;

this.maxBufferSize = 1000000; // an arbitrary high enough number of vertices

this.geometries = [];

this.currentGeometry = null;

this.addBuffer();

}

addBuffer will create a Mesh with a BufferGeometry that has the needed attributes, and add it to the root Object3D’s list of meshes.
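The body of addBuffer is not shown here, so the following is only a sketch of the general idea, assuming plain Float32Arrays in place of the Mesh and BufferGeometry attributes: allocate a fixed-size buffer up front and start a fresh one when the current buffer is full. SharedPositions is a hypothetical stand-in, and it ignores the argument that the real addBuffer accepts.

```javascript
// Hypothetical stand-in for SharedBufferGeometry that only tracks positions.
function SharedPositions(maxVertices) {
  this.maxVertices = maxVertices;
  this.buffers = [];   // every buffer allocated so far
  this.current = null; // buffer currently being filled
  this.idx = 0;        // next free vertex slot in the current buffer
  this.addBuffer();
}

SharedPositions.prototype = {
  // Allocate a new fixed-size buffer and make it the current one.
  addBuffer: function () {
    this.current = new Float32Array(this.maxVertices * 3);
    this.buffers.push(this.current);
    this.idx = 0;
  },

  // Append a vertex, rolling over to a new buffer when the current one is full.
  addVertex: function (x, y, z) {
    if (this.idx === this.maxVertices) {
      this.addBuffer();
    }
    this.current.set([x, y, z], this.idx * 3);
    this.idx++;
  }
};

// A tiny capacity of two vertices forces a rollover on the third vertex.
const shared = new SharedPositions(2);
shared.addVertex(0, 0, 0);
shared.addVertex(1, 0, 0);
shared.addVertex(2, 0, 0);
console.log('buffers allocated:', shared.buffers.length);
```

Callers never see the rollover: they keep calling addVertex and the class decides which buffer receives the data.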

SharedBufferGeometry will also contain some helper functions so we don’t need to care about indices or buffer overflows, which are handled automatically.

addVertex: function (x, y, z) {

var buffer = this.currentGeometry.attributes.position;

if (this.idx.position === buffer.count) {

this.addBuffer(true);

buffer = this.currentGeometry.attributes.position;

}

buffer.setXYZ(this.idx.position++, x, y, z);

},

addColor: function (r, g, b) {

this.currentGeometry.attributes.color.setXYZ(this.idx.color++, r, g, b);

},

addNormal: function (x, y, z) {

this.currentGeometry.attributes.normal.setXYZ(this.idx.normal++, x, y, z);

},

addUV: function (u, v) {

this.currentGeometry.attributes.uv.setXY(this.idx.uv++, u, v);

},

Actually, we’ll need more than one BufferGeometry because some brushes don’t have textures, so they don’t need UV attributes, and/or are not affected by lighting, so they don't need a normal attribute either.

We'll create SharedBufferGeometryManager to handle all these buffers:

function SharedBufferGeometryManager () {

this.sharedBuffers = {};

}

SharedBufferGeometryManager.prototype = {

addSharedBuffer: function (name, material) {

var bufferGeometry = new SharedBufferGeometry(material);

this.sharedBuffers[name] = bufferGeometry;

},

getSharedBuffer: function (name) {

return this.sharedBuffers[name];

}

};

We will initialize the buffers by calling addSharedBuffer with the different types of materials we’ll be using:

sharedBufferGeometryManager.addSharedBuffer('unlit', unlitMaterial);

sharedBufferGeometryManager.addSharedBuffer('unlitTextured', unlitTexturedMaterial);

sharedBufferGeometryManager.addSharedBuffer('PBR', pbrMaterial);

sharedBufferGeometryManager.addSharedBuffer('PBRTextured', pbrTexturedMaterial);

And we will be ready to use them:

var sharedBuffer = sharedBufferGeometryManager.get(‘PBR’);

sharedBuffer.addVertex(0, 1, 0);

sharedBuffer.addVertex(1, 1, 0);

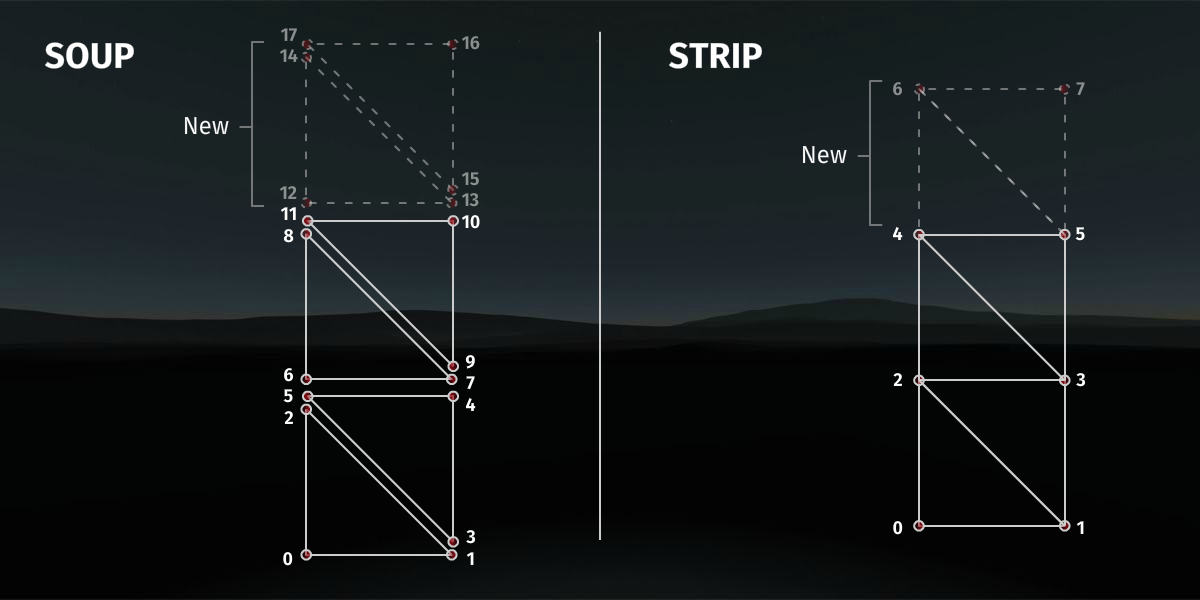

sharedBuffer.addUV(0, 0);Since we are storing several meshes without any connection, we’ll be using a single triangle soup. That means we’ll store three vertices for each triangle without sharing any of them. This will increase the bandwidth and memory requirements, as we’re storing (NUM_POINTS_PER_STROKE * 2 * 3) floats for each stroke.

In the case of the Balrog scene, using this method, our final buffer will have 406,782 floats.

Triangle strips

To reduce the size of our BufferGeometry attributes, we can switch from a triangle soup to triangle strips. Although using triangle strips can be challenging in some situations, using them when painting lines/ribbons is pretty straightforward: we just need to keep adding two new vertices at a time as the line grows.

Using triangle strips, we’ll drastically reduce the size of the position attribute array.

- Triangle soup: NUMBER_OF_STROKE_POINTS * 2 (triangles) * 3 (vertices) * 3 (xyz)

- Triangle strip: NUMBER_OF_STROKE_POINTS * 2 (vertices)

As an example, here are the position array sizes from the Balrog scene:

- Triangle soup: 135,594.

- Triangle strip: 47,248.
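The ratio between the two layouts can be checked with a little arithmetic. The sketch below assumes a single ribbon in which every stroke point contributes two vertices (one per edge), so a ribbon with N points has 2 * (N - 1) triangles. The ribbonVertexCounts function is a hypothetical helper written for this illustration.

```javascript
// Vertex counts for one ribbon stroke, assuming two vertices per stroke point.
function ribbonVertexCounts(points) {
  const triangles = 2 * (points - 1); // two triangles per ribbon segment
  return {
    soup: triangles * 3, // every triangle stores its own three vertices
    strip: points * 2    // each new point only adds two vertices
  };
}

const counts = ribbonVertexCounts(100);
console.log(counts.soup, counts.strip, counts.soup / counts.strip);
```

For long strokes the soup needs close to three times as many vertices as the strip, which is in line with the Balrog figures above (135,594 against 47,248, a ratio of about 2.87).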

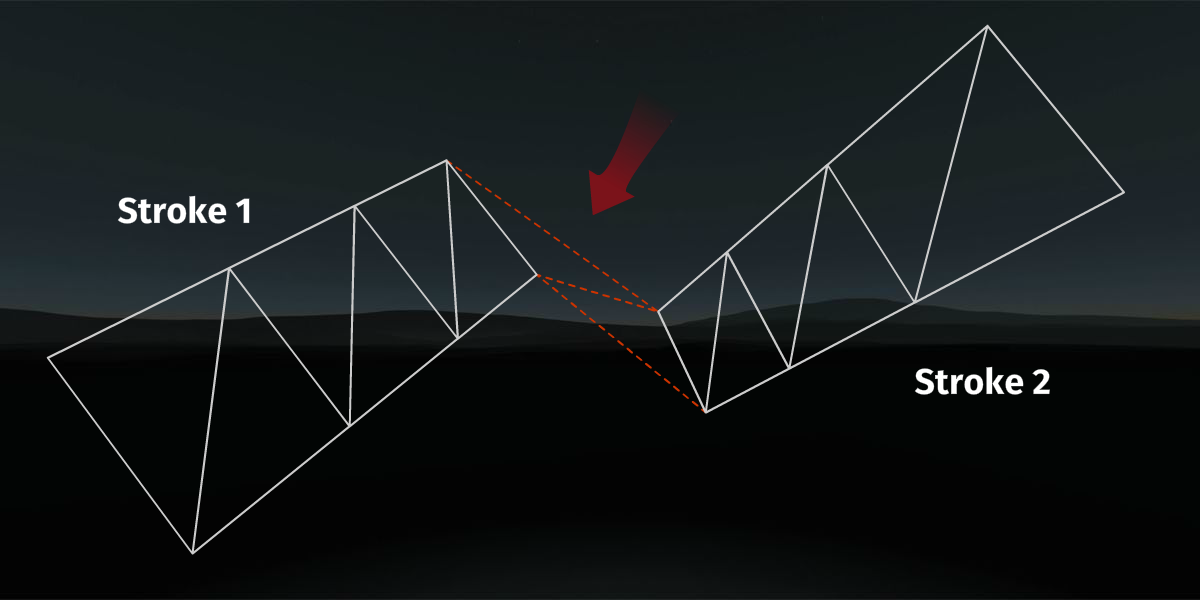

At first, this looks like an easy win, but if we look carefully, we will realize that we need to fix some issues. We are sharing the same buffer to draw several unconnected strokes, but every time we add a new vertex, it will create a new triangle using the two previous vertices, so all the strokes will be hideously connected:

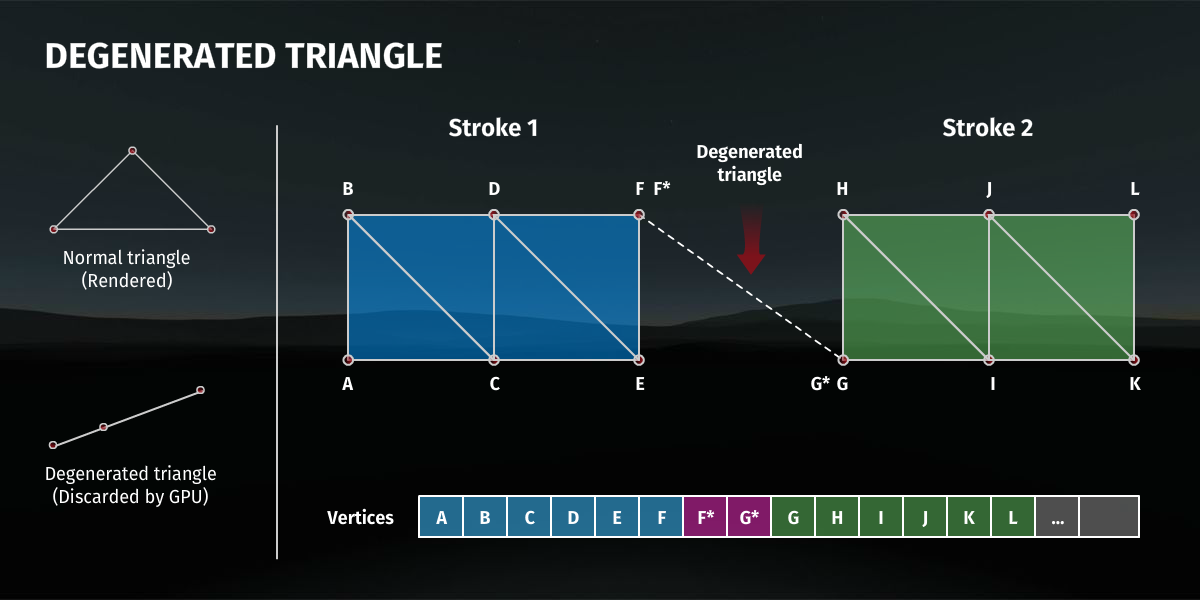

We need a way to tell WebGL to skip those triangles. Although WebGL doesn’t support primitive restart, we can create our own “primitive restart” by adding a degenerate triangle: a triangle with no area, which the GPU discards.

To create the degenerate triangles that separate two strokes, we just duplicate the last vertex of the first stroke and the first vertex of the next stroke.

So, I created a restartPrimitive() function on the SharedBufferGeometry to duplicate the last vertex of the latest stroke. Once the first point of the next stroke is added, it has to be duplicated too.

The hardest part here is working with indices: we need to keep track of the degenerate vertices, and the resulting position offsets have to be applied to the texture coordinates, colors, and normals, too.

You can take a look at the Line Brush to see the whole implementation.
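The vertex duplication can be sketched independently of three.js. In the example below, vertices are represented by string ids instead of coordinates, and appendStroke performs both duplications at once when a stroke is appended. That is a simplification of the restartPrimitive() flow described above, and both function names are hypothetical.

```javascript
// Appends a stroke (a list of vertex ids) to a shared triangle strip,
// inserting the two duplicated vertices that separate it from the previous one.
function appendStroke(strip, stroke) {
  if (strip.length > 0) {
    strip.push(strip[strip.length - 1]); // repeat last vertex of previous stroke
    strip.push(stroke[0]);               // repeat first vertex of the new stroke
  }
  for (const vertex of stroke) {
    strip.push(vertex);
  }
  return strip;
}

// Counts the triangles of a strip that have three distinct vertices.
// Triangles with a repeated vertex have no area and are never rasterized.
function countVisibleTriangles(strip) {
  let visible = 0;
  for (let i = 0; i + 2 < strip.length; i++) {
    const a = strip[i];
    const b = strip[i + 1];
    const c = strip[i + 2];
    if (a !== b && b !== c && a !== c) {
      visible++;
    }
  }
  return visible;
}

const strip = [];
appendStroke(strip, ['a0', 'a1', 'a2', 'a3']);
appendStroke(strip, ['b0', 'b1', 'b2', 'b3']);
console.log(strip.join(' '));
console.log('visible triangles:', countVisibleTriangles(strip));
```

The combined strip is a0 a1 a2 a3 a3 b0 b0 b1 b2 b3. It contains eight triangles, but the four that bridge the two strokes all repeat a vertex, so only the two triangles of each stroke remain visible. Because each stroke contributes an even number of vertices and the separator adds two more, the winding order of the second stroke is preserved.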

Results

In the following table, we can see the statistics of the Balrog scene before and after the optimizations described above:

Please note that these numbers also include common scene objects: floor, sky, controllers…

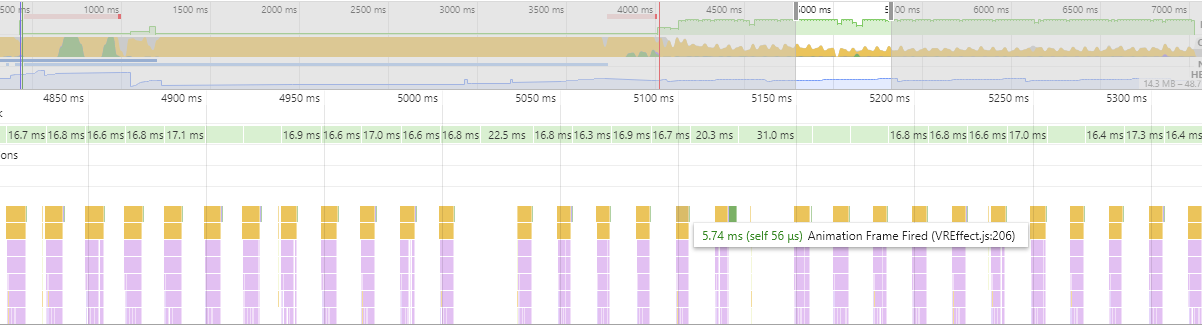

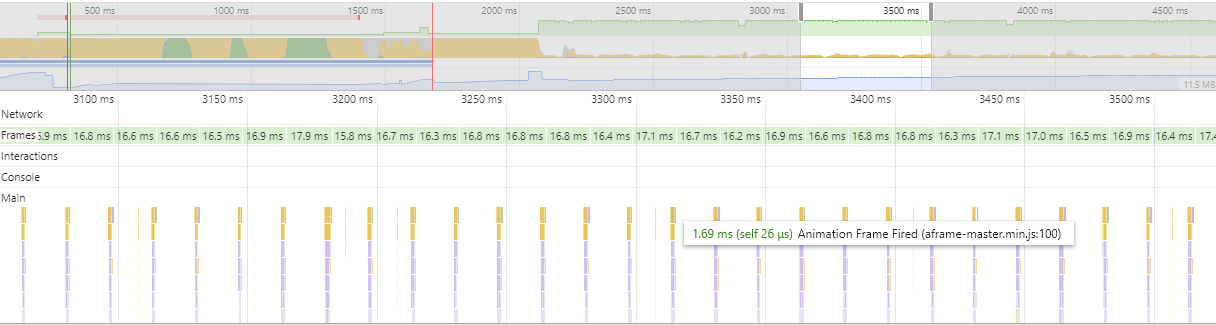

If we launch the devtools, we can see that we drastically reduced the animation frame time:

Before:

After:

The performance graphs show how often the garbage collector was triggered by the allocations we made in the render loop. We’ve also considerably reduced CPU usage.

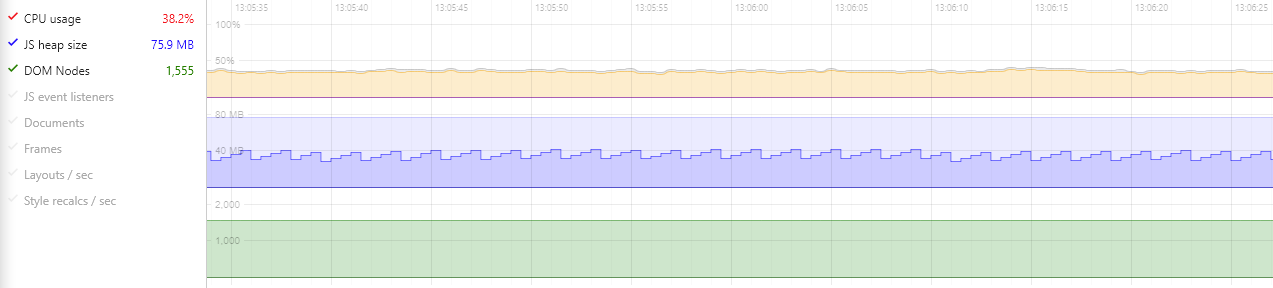

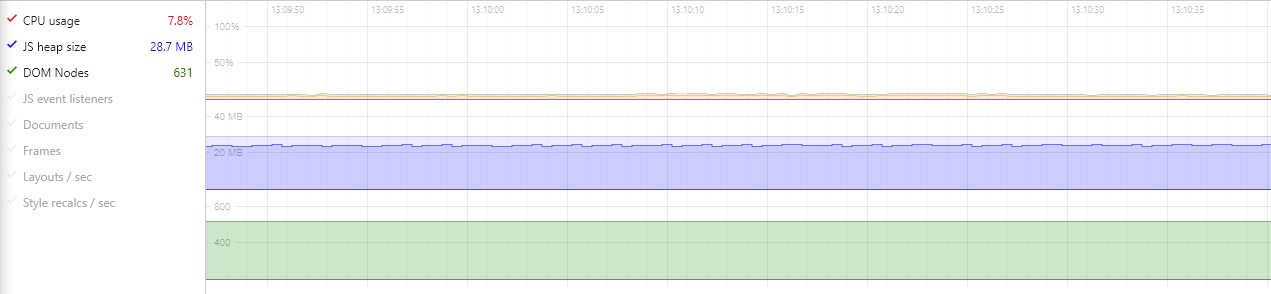

Before:

After:

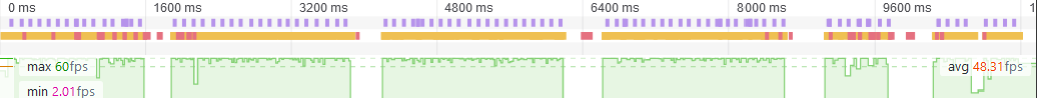

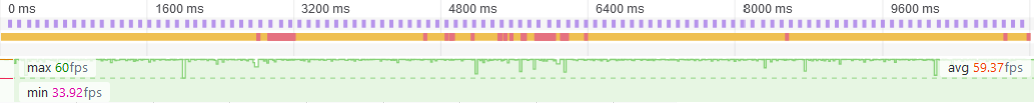

The performance differences are visible on both Chrome and Firefox. For example, the following is a graph from Firefox, where the frame rate drops to 2 fps almost every two seconds.

Before:

After:

As demonstrated, the implemented optimizations have a major impact on A-Painter performance without adding too much complexity to the code. VR mode benefits the most. You can now paint for a long time without getting motion sickness: That’s a pretty good usability enhancement.

Further improvements

There are still many other optimizations that can further improve performance:

- Find a workaround for a bug (or feature) when computing the bounding box or sphere of a BufferGeometry: the result always includes the unused vertices from the array (vertices at the origin (0,0,0)), so frustum culling won’t be as effective as it should.

- Use geometry instancing on brushes that paint spheres and cubes, so we don’t create new geometry on each stroke but instead reuse the previously created geometry.

- Use a LOD system to reduce the complexity of drawings based on distance. This could be useful if used in a big open environment like a social AR app.

- Also, in big open spaces, it could be useful to implement some kind of space partitioning (Octrees or BVH) to improve culling and interaction on each stroke.
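For the first item, one possible direction (an assumption on my part, not something A-Painter implements) is to compute the bounds manually over only the vertices written so far, instead of over the whole preallocated array. The boundsOfUsedVertices helper below is hypothetical and works on a plain position array.

```javascript
// Computes an axis-aligned bounding box over the first `usedVertexCount`
// vertices only, ignoring the zero-filled tail of a preallocated array.
function boundsOfUsedVertices(positions, usedVertexCount) {
  const min = [Infinity, Infinity, Infinity];
  const max = [-Infinity, -Infinity, -Infinity];
  for (let i = 0; i < usedVertexCount; i++) {
    for (let axis = 0; axis < 3; axis++) {
      const value = positions[3 * i + axis];
      if (value < min[axis]) min[axis] = value;
      if (value > max[axis]) max[axis] = value;
    }
  }
  return { min: min, max: max };
}

// Room for four vertices, but only two have been written so far.
const positions = new Float32Array(4 * 3);
positions.set([5, 5, 5, 6, 7, 8]);
const bounds = boundsOfUsedVertices(positions, 2);
console.log(bounds.min, bounds.max);
```

Here the box spans (5, 5, 5) to (6, 7, 8). A box computed over the full array would stretch back to the origin because of the two unused vertices.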