Introduction

I was working on this demo with Diego Fernandez to celebrate the release of the WebXR API specification.

The demo was designed as a playground where you can try different experiences and interactions in VR. It also serves as a smooth, easy and friendly introduction for newcomers to the VR world and its particular language.

When the demo loads, you start in the main hall, where you can find:

- Floating spheres containing 360° mono and stereo panoramas

- A pair of sticks that you can grab to play the xylophone

- A painting exhibition where paintings can be zoomed and inspected at will

- A wall where you can use a graffiti spray can to paint whatever you want

- A Twitter feed panel showing tweets with the hashtag #hellowebxr

- Three doors that teleport you to other locations:

  - A dark room to experience positional audio

  - A room displaying a classical sculpture captured using photogrammetry

  - The rooftop of a building among skyscrapers, to test your vertigo

Reception

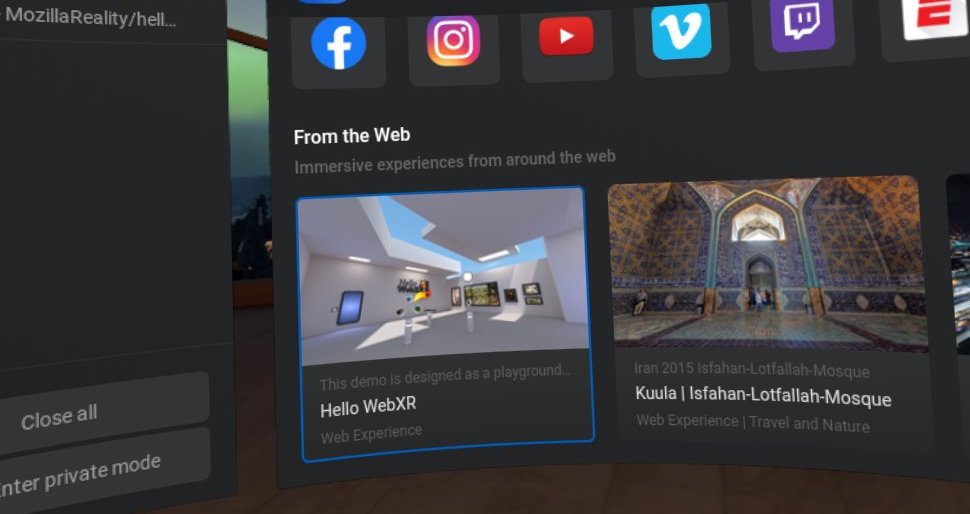

We were quite happy with the community's reception of the demo, both on Twitter and in the digital press (I especially liked this detailed article by David Heaney in UploadVR).

For a while now, the demo has also been featured on the three.js landing page and in the Oculus Browser suggestions.

Implementation

The demo was built with web technologies: the three.js engine, plus our ECSY framework in some parts. We also used the latest standards, such as glTF with Draco compression for models and Basis for textures. The models were created in Blender, and the lighting is baked throughout the whole demo.

All the rooms live within the same application. I wish the WebXR Navigation API had already landed, so we could treat each room as a separate WebXR application.

One of the harder parts of implementing each interaction and room was defining how the controller should behave in each situation. For example, while painting graffiti with the controller you should still be able to teleport somewhere, and when you are near the xylophone you should be able to grab the sticks. Because we were building the application with extensibility in mind, we didn't want to create dependencies between the different controllers and the app's states, so I introduced two concepts:

Ray control states

Each experience defines its own states for the controllers. A state definition consists mainly of a collider mesh, a priority order (used when multiple states collide at the same time), and several callbacks that are called when the controller's ray enters, hovers over, or leaves the collider mesh, and whenever you press or release the primary button.
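To make the priority rule concrete, here is a minimal sketch of how such a registry could dispatch events. It is a hypothetical re-implementation, not the demo's actual code: each state's collider mesh is abstracted as a `hitTest(ray)` function (in three.js it would typically wrap a raycast against `colliderMesh`), a lower `order` value is assumed to mean a higher priority, the `active` argument of `onHover` is assumed to mean "primary button held", and `update`, `selectStart` and `selectEnd` are names made up for this example.

```javascript
// Hypothetical sketch of a ray control registry (not the demo's actual code).
// Colliders are abstracted as hitTest(ray) functions returning an intersection
// or null; in three.js this would typically wrap a raycast against colliderMesh.
class RayControl {
  constructor() {
    this.states = new Map(); // name -> state definition
    this.active = new Set(); // names of enabled states
    this.current = null;     // { name, intersection } of the hovered state, or null
    this.selecting = false;  // whether the primary button is held
  }

  addState(name, def) { this.states.set(name, def); }
  activate(name) { this.active.add(name); }

  deactivate(name) {
    this.active.delete(name);
    if (this.current && this.current.name === name) this._leave();
  }

  deactivateAll() {
    this.active.clear();
    this._leave();
  }

  // Call once per frame with the controller's current ray.
  update(ray, controller) {
    let best = null;
    for (const name of this.active) {
      const state = this.states.get(name);
      const intersection = state.hitTest(ray);
      // Assumption: a lower `order` means a higher priority.
      if (intersection && (!best || state.order < best.order)) {
        best = { name, order: state.order, intersection };
      }
    }
    if (this.current && (!best || best.name !== this.current.name)) this._leave();
    if (!best) return;
    if (!this.current) {
      this.current = { name: best.name, intersection: best.intersection };
      this._call(best.name, 'onHoverStart', best.intersection);
    }
    this.current.intersection = best.intersection;
    // Assumption: `active` in onHover means "primary button held".
    this._call(best.name, 'onHover', best.intersection, this.selecting, controller);
  }

  selectStart(controller) {
    this.selecting = true;
    if (this.current) this._call(this.current.name, 'onSelectStart', this.current.intersection, controller);
  }

  selectEnd() {
    this.selecting = false;
    if (this.current) this._call(this.current.name, 'onSelectEnd', this.current.intersection);
  }

  _leave() {
    if (!this.current) return;
    const { name, intersection } = this.current;
    this.current = null;
    this._call(name, 'onHoverLeave', intersection);
  }

  _call(name, hook, ...args) {
    const fn = this.states.get(name)[hook];
    if (fn) fn(...args);
  }
}

// Usage: two overlapping states; graffiti (order 1) outranks teleport (order 2).
const log = [];
const rc = new RayControl();
rc.addState('teleport', {
  order: 2,
  hitTest: ray => (ray.y < 0 ? { point: 'floor' } : null),
  onHoverStart: () => log.push('teleport:start'),
  onHoverLeave: () => log.push('teleport:leave'),
});
rc.addState('graffiti', {
  order: 1,
  hitTest: ray => (ray.x > 0 ? { point: 'wall' } : null),
  onHoverStart: () => log.push('graffiti:start'),
  onSelectStart: hit => log.push('spray:' + hit.point),
});
rc.activate('teleport');
rc.activate('graffiti');
rc.update({ x: -1, y: -1 }); // only teleport is hit
rc.update({ x: 1, y: -1 });  // both are hit; graffiti wins
rc.selectStart();
```

Only the winning state receives events, so experiences never need to know about each other: in this sketch the graffiti state simply outranks teleport while the ray is on the wall, and teleport takes over again as soon as the wall is no longer hit or its state is deactivated.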

```javascript
ctx.raycontrol.addState('stateName', {
  colliderMesh: mesh, // THREE.Object3D (Mesh) tested against the controller's ray
  order: 1,           // Number: priority when several states collide at once
  onHoverStart: (intersection) => {},
  onHover: (intersection, active, controller) => {},
  onHoverLeave: (intersection) => {},
  onSelectStart: (intersection, controller) => {},
  onSelectEnd: (intersection) => {}
});
```

Then, in the application's state management, you can enable or disable specific states depending on the room or experience you are in.

```javascript
ctx.raycontrol.activate('stateName');
ctx.raycontrol.deactivate('stateName');
ctx.raycontrol.deactivateAll();
```

Area checker

To help define context-based state, I implemented an AreaChecker helper that checks whether specific entities in the scene, such as the controllers, are inside an area (defined as a bounding box). For example, I used this helper to turn the controllers' models into a color wheel and a spray can when they approach the graffiti area.
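The core of such a check can be sketched without ECSY or three.js. This is a hypothetical stand-alone version, not the demo's component code: an area is a plain axis-aligned box `{ name, min, max }`, and a made-up `AreaTracker` class remembers which areas an entity was inside on the previous frame, so `onEntering` and `onExiting` fire only when that membership changes. In three.js, `THREE.Box3.containsPoint` performs the same inclusive test as `containsPoint` here.

```javascript
// Hypothetical sketch, independent of ECSY and three.js: areas are plain
// axis-aligned bounding boxes { name, min, max } with { x, y, z } corners.
function containsPoint(box, p) {
  return p.x >= box.min.x && p.x <= box.max.x &&
         p.y >= box.min.y && p.y <= box.max.y &&
         p.z >= box.min.z && p.z <= box.max.z;
}

// Tracks which areas one entity (e.g. a controller) is inside and fires
// onEntering/onExiting only when that membership changes between updates.
class AreaTracker {
  constructor(areas, { onEntering = () => {}, onExiting = () => {} } = {}) {
    this.areas = areas;
    this.inside = new Set(); // area names the entity was in at the last update
    this.onEntering = onEntering;
    this.onExiting = onExiting;
  }

  update(position) {
    const now = new Set(
      this.areas.filter(area => containsPoint(area, position)).map(area => area.name)
    );
    for (const name of this.inside) if (!now.has(name)) this.onExiting(name);
    for (const name of now) if (!this.inside.has(name)) this.onEntering(name);
    this.inside = now;
  }
}

// Usage: a controller moving through a hypothetical graffiti area.
const events = [];
const tracker = new AreaTracker(
  [{ name: 'graffiti', min: { x: 0, y: 0, z: 0 }, max: { x: 2, y: 3, z: 1 } }],
  { onEntering: a => events.push('enter:' + a), onExiting: a => events.push('exit:' + a) }
);
tracker.update({ x: -1, y: 1, z: 0.5 });  // outside: no event
tracker.update({ x: 1, y: 1, z: 0.5 });   // enters the graffiti area
tracker.update({ x: 1.5, y: 1, z: 0.5 }); // still inside: no event
tracker.update({ x: 3, y: 1, z: 0.5 });   // leaves the graffiti area
```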

Defining an area is as easy as creating an entity and adding a BoundingBox component and an Area tag component. Optionally, you can add the DebugHelper component to render a wireframe rectangle of the area.

```javascript
const area = ctx.world.createEntity("area");
area
  .addComponent(BoundingBox, { min: minPos, max: maxPos })
  // .addComponent(DebugHelper)
  .addComponent(Area);
```

Reacting whenever you enter or leave an area is as simple as the following code, which shows or hides the color wheel model when the controller enters or leaves the graffiti area in the main hall:

```javascript
controllerEntity
  .addComponent(AreaChecker)
  .addComponent(AreaReactor, {
    onEntering: area => {
      if (area === "graffiti") {
        controllerEntity.showColorWheel();
      }
    },
    onExiting: area => {
      if (area === "graffiti") {
        controllerEntity.hideColorWheel();
      }
    }
  });
```

Graffiti

The graffiti experience was not part of the initial roadmap, but after trying the KingSpray graffiti application on my Oculus Quest, I decided to implement a proof of concept in WebXR. It turned out I liked it more than I expected. Even though I implemented A-Painter a while back, I found that once the initial "wow" factor of painting in 3D wears off, graffiti seems more accessible to most users than trying to create something in 3D.

There are still many improvements that could be made to the graffiti experience. On the performance side, for example, I'm painting on a canvas and re-uploading the whole texture every time, which is expensive. One improvement would be to call texSubImage2D with only the portion of the texture being updated (based on the brush size and laser position). An even better approach would be to avoid the canvas entirely and paint directly in WebGL, which would also fix the premultiplied-alpha issue that comes with the canvas API.
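The texSubImage2D idea boils down to computing which pixels a brush stamp touched. Below is a hypothetical helper for that, assuming a circular brush whose radius is given in texture pixels and a laser hit given in UV coordinates with v = 0 at the top row of the canvas (flip v first if your UVs are bottom-up). `brushDirtyRect` and `unionRect` are names made up for this sketch; `unionRect` merges several stamps so each frame needs a single upload.

```javascript
// Hypothetical helper: the pixel rectangle touched by one circular brush stamp.
// Assumes (u, v) in 0..1 with v = 0 at the top row of the canvas.
function brushDirtyRect(u, v, brushRadiusPx, texWidth, texHeight) {
  const cx = u * texWidth;
  const cy = v * texHeight;
  // Grow to whole pixels, then clamp to the texture bounds.
  const x0 = Math.max(0, Math.floor(cx - brushRadiusPx));
  const y0 = Math.max(0, Math.floor(cy - brushRadiusPx));
  const x1 = Math.min(texWidth, Math.ceil(cx + brushRadiusPx));
  const y1 = Math.min(texHeight, Math.ceil(cy + brushRadiusPx));
  if (x1 <= x0 || y1 <= y0) return null; // stamp lies entirely off the texture
  return { x: x0, y: y0, width: x1 - x0, height: y1 - y0 };
}

// Smallest rectangle covering both inputs (either may be null).
function unionRect(a, b) {
  if (!a) return b;
  if (!b) return a;
  const x = Math.min(a.x, b.x);
  const y = Math.min(a.y, b.y);
  return {
    x,
    y,
    width: Math.max(a.x + a.width, b.x + b.width) - x,
    height: Math.max(a.y + a.height, b.y + b.height) - y,
  };
}

// Accumulate this frame's stamps, then upload only the dirty region r, e.g.
// (assumes the canvas texture is bound to gl.TEXTURE_2D and was uploaded with
// UNPACK_FLIP_Y_WEBGL = false; with flipY = true, use texHeight - r.y - r.height
// as the y offset instead):
//   const img = ctx2d.getImageData(r.x, r.y, r.width, r.height);
//   gl.texSubImage2D(gl.TEXTURE_2D, 0, r.x, r.y, gl.RGBA, gl.UNSIGNED_BYTE, img);
let dirty = null;
dirty = unionRect(dirty, brushDirtyRect(0.5, 0.5, 16, 1024, 1024));
dirty = unionRect(dirty, brushDirtyRect(0.52, 0.5, 16, 1024, 1024));
```

For a 16-pixel brush on a 1024×1024 texture, each stamp re-uploads a region of a few dozen pixels per side instead of roughly a million pixels.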

I have even been thinking about creating a bigger application focused solely on graffiti, with multiple users painting on the same wall and the paintings stored in the cloud, like one big shared graffiti wall.