The WebGL multiview extension is already available in several browsers and 3D web engines, and it can help improve the performance of your WebXR application.

What is multiview?

When VR first arrived, many engines supported stereo rendering by running all the render stages twice, once for each camera/eye. While this works, it is highly inefficient.

    for (eye in eyes)
        renderScene(eye)

Here renderScene sets up the viewport, shaders, and state every time it is called, which doubles the cost of rendering every frame.

Later on, some optimizations started to appear in order to improve the performance and minimize the state changes.

    for (object in scene)
        for (eye in eyes)
            renderObject(object, eye)

Even if we reduce the number of state changes by grouping objects and switching programs less often, the number of draw calls remains the same: twice the number of objects.

The multiview extension was created to minimize this bottleneck. The TL;DR of this extension is: using just one draw call, you can draw on multiple targets, reducing the overhead per view.

This is done by adding the information for each view to your shader uniforms and selecting the right entry with the built-in gl_ViewID_OVR variable, similar to how the instancing API works.

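    #version 300 es
    #extension GL_OVR_multiview2 : require
    layout(num_views = 2) in; // the draw call is executed once per view

    in vec4 inPos;
    uniform mat4 u_viewMatrices[2];

    void main() {
        gl_Position = u_viewMatrices[gl_ViewID_OVR] * inPos;
    }

The resulting render loop with the multiview extension will look like: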

    for (object in scene)
        setUniformsForBothEyes() // Left/Right camera matrices
        renderObject(object)

This extension can be used to speed up multiple tasks, such as rendering cascaded shadow maps, cubemaps, or multiple viewports as in CAD software, although the most common use case is stereo rendering.
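As a rough illustration of what setUniformsForBothEyes() could do in a WebGL2 application, the sketch below packs the left and right matrices into one Float32Array and uploads them to the u_viewMatrices array in a single call. The function signature and the packViewMatrices helper are assumptions made for this example and are not taken from any engine.

```javascript
// Hypothetical helper: packs one column-major 4x4 matrix per view into a
// single Float32Array so the whole uniform array can be uploaded at once.
function packViewMatrices(matrices) {
  const packed = new Float32Array(16 * matrices.length);
  matrices.forEach((matrix, index) => packed.set(matrix, 16 * index));
  return packed;
}

// Hypothetical helper: uploads the per-eye matrices to the shader array
// declared as `uniform mat4 u_viewMatrices[2]`.
// `gl` is a WebGL2RenderingContext and `program` is a linked WebGLProgram
// that has already been made current with gl.useProgram(program).
function setUniformsForBothEyes(gl, program, leftMatrix, rightMatrix) {
  const location = gl.getUniformLocation(program, 'u_viewMatrices');
  // WebGL requires the transpose argument to be false for this upload.
  gl.uniformMatrix4fv(location, false, packViewMatrices([leftMatrix, rightMatrix]));
}
```

Each matrix is expected to be an array or Float32Array of 16 numbers holding whatever per-eye transform the shader multiplies the vertex position by.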

Stereo rendering is also our main target, as this will improve the performance of the VR rendering path with just a few modifications to a 3D engine. Currently, most headsets have two views, but there are prototypes of headsets with ultra-wide FOV that use four views, which is currently the maximum number of views supported by multiview.
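To make the difference between the three render loops concrete, here is a small sketch that only counts how many draw calls each strategy would submit. It does not render anything; countDrawCalls and the names of its counters are made up for this example, and it assumes one draw call per object.

```javascript
// Counts the draw calls submitted by each of the three render loops,
// assuming every object costs exactly one draw call per submission.
function countDrawCalls(scene, eyes) {
  const counts = { scenePerEye: 0, objectPerEye: 0, multiview: 0 };

  // 1. Render the whole scene once per eye.
  for (const eye of eyes) {
    for (const object of scene) counts.scenePerEye += 1;
  }

  // 2. Render each object once per eye (fewer state changes, same draws).
  for (const object of scene) {
    for (const eye of eyes) counts.objectPerEye += 1;
  }

  // 3. Multiview: one draw call per object covers every view.
  for (const object of scene) counts.multiview += 1;

  return counts;
}

const stereo = countDrawCalls(new Array(1000).fill('cube'), ['left', 'right']);
// stereo is { scenePerEye: 2000, objectPerEye: 2000, multiview: 1000 }
```

With two eyes the multiview loop submits half as many draw calls, and the gap grows with the number of views.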

Multiview in WebGL

Once the OpenGL OVR_multiview2 specification was created, the WebGL working group started to make a WebGL version of this API.

It’s been a while since our first experiment supporting multiview on Servo and three.js. Back then it was quite a challenge to support WEBGL_multiview: it was based on opaque framebuffers, and although it could be used with WebGL1, the shaders needed to be compiled with GLSL 3.00 support, which was only available on WebGL2, so some hacks on the Servo side were needed to get it running.

At that time the WebVR spec had a proposal to support multiview but it was not approved.

Thanks to the work of the WebGL WG, the multiview situation has improved a lot in the last few months. The specification is already in the Community Approved status, which means that browsers can ship it enabled by default (as we do on Firefox desktop 70 and Firefox Reality 1.4).

Some important restrictions of the final specification to notice:

- It only supports WebGL2 contexts, as it needs GLSL 3.00 and texture arrays.

- Currently there is no way to use multiview to render to a multisampled backbuffer, so you should create contexts with antialias: false. (The WebGL WG is working on a solution for this.)
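The two restrictions above translate into a short feature check. The following is a minimal sketch, assuming you have a canvas element at hand; getMultiviewContext is a name chosen for this example, while the context type, the antialias option, and the OVR_multiview2 extension name come from the WebGL specification.

```javascript
// Returns { gl, ext, maxViews } when multiview can be used on this canvas,
// or null when WebGL2 or the OVR_multiview2 extension is unavailable.
function getMultiviewContext(canvas) {
  // Multiview cannot render to a multisampled backbuffer, so antialiasing
  // is explicitly disabled when the context is created.
  const gl = canvas.getContext('webgl2', { antialias: false });
  if (!gl) return null;

  const ext = gl.getExtension('OVR_multiview2');
  if (!ext) return null;

  // Maximum number of views the implementation can render in one draw call.
  const maxViews = gl.getParameter(ext.MAX_VIEWS_OVR);
  return { gl, ext, maxViews };
}
```

An application can call this once at startup and fall back to its regular per-eye rendering path when the result is null.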

Web engines with multiview support

We have been working for a while on adding multiview support to three.js (PR). Currently it is possible to get the benefits of multiview automatically as long as the extension is available and you define a WebGL2 context without antialias:

    var context = canvas.getContext( 'webgl2', { antialias: false } );
    renderer = new THREE.WebGLRenderer( { canvas: canvas, context: context } );

You can see a three.js example using multiview here (source code).

A-Frame is based on three.js, so it should get multiview support as soon as it updates to the latest release.

Babylon.js has supported OVR_multiview2 for a while already (more info).

For details on how to use multiview directly without any third-party engine, you can take a look at the three.js implementation, see the specification’s sample code, or read this tutorial by Oculus.
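For a feel of what the direct route involves, the sketch below follows the general shape of the specification's sample code: it allocates texture arrays with one layer per view and attaches them to a framebuffer through the extension. The function name, its parameters, and the choice of RGBA8 and DEPTH_COMPONENT24 formats are assumptions for this example; `gl` is a WebGL2 context and `ext` is the object returned by gl.getExtension('OVR_multiview2').

```javascript
// Creates a framebuffer whose color and depth attachments are texture
// arrays with one layer per view, as required for multiview rendering.
function createMultiviewFramebuffer(gl, ext, width, height, numViews) {
  const framebuffer = gl.createFramebuffer();
  gl.bindFramebuffer(gl.DRAW_FRAMEBUFFER, framebuffer);

  // Color attachment: a 2D texture array with numViews layers.
  const colorTexture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D_ARRAY, colorTexture);
  gl.texStorage3D(gl.TEXTURE_2D_ARRAY, 1, gl.RGBA8, width, height, numViews);
  ext.framebufferTextureMultiviewOVR(
    gl.DRAW_FRAMEBUFFER, gl.COLOR_ATTACHMENT0, colorTexture, 0, 0, numViews);

  // Depth attachment: same size and layer count as the color attachment.
  const depthTexture = gl.createTexture();
  gl.bindTexture(gl.TEXTURE_2D_ARRAY, depthTexture);
  gl.texStorage3D(gl.TEXTURE_2D_ARRAY, 1, gl.DEPTH_COMPONENT24, width, height, numViews);
  ext.framebufferTextureMultiviewOVR(
    gl.DRAW_FRAMEBUFFER, gl.DEPTH_ATTACHMENT, depthTexture, 0, 0, numViews);

  const complete =
    gl.checkFramebufferStatus(gl.DRAW_FRAMEBUFFER) === gl.FRAMEBUFFER_COMPLETE;
  return { framebuffer, colorTexture, depthTexture, complete };
}
```

While this framebuffer is bound, a single draw call is broadcast to every layer of the attached textures, and the results still have to be copied or composited to the final render target afterwards.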

Browsers with multiview support

The extension was only recently approved by the community, so we expect to see all the major browsers adding support for it by default soon.

- Firefox Desktop: Firefox 71 will ship with multiview enabled by default. In the meantime, you can test it on Firefox Nightly by enabling draft extensions.

- Firefox Reality: It’s already enabled by default since version 1.3.

- Oculus Browser: It’s implemented but disabled by default; you must enable the Draft WebGL Extension preference in order to use it.

- Chrome: You can use it on Chrome Canary for Windows by running it with the following command line parameters:

--use-cmd-decoder=passthrough --enable-webgl-draft-extensions

Performance improvements

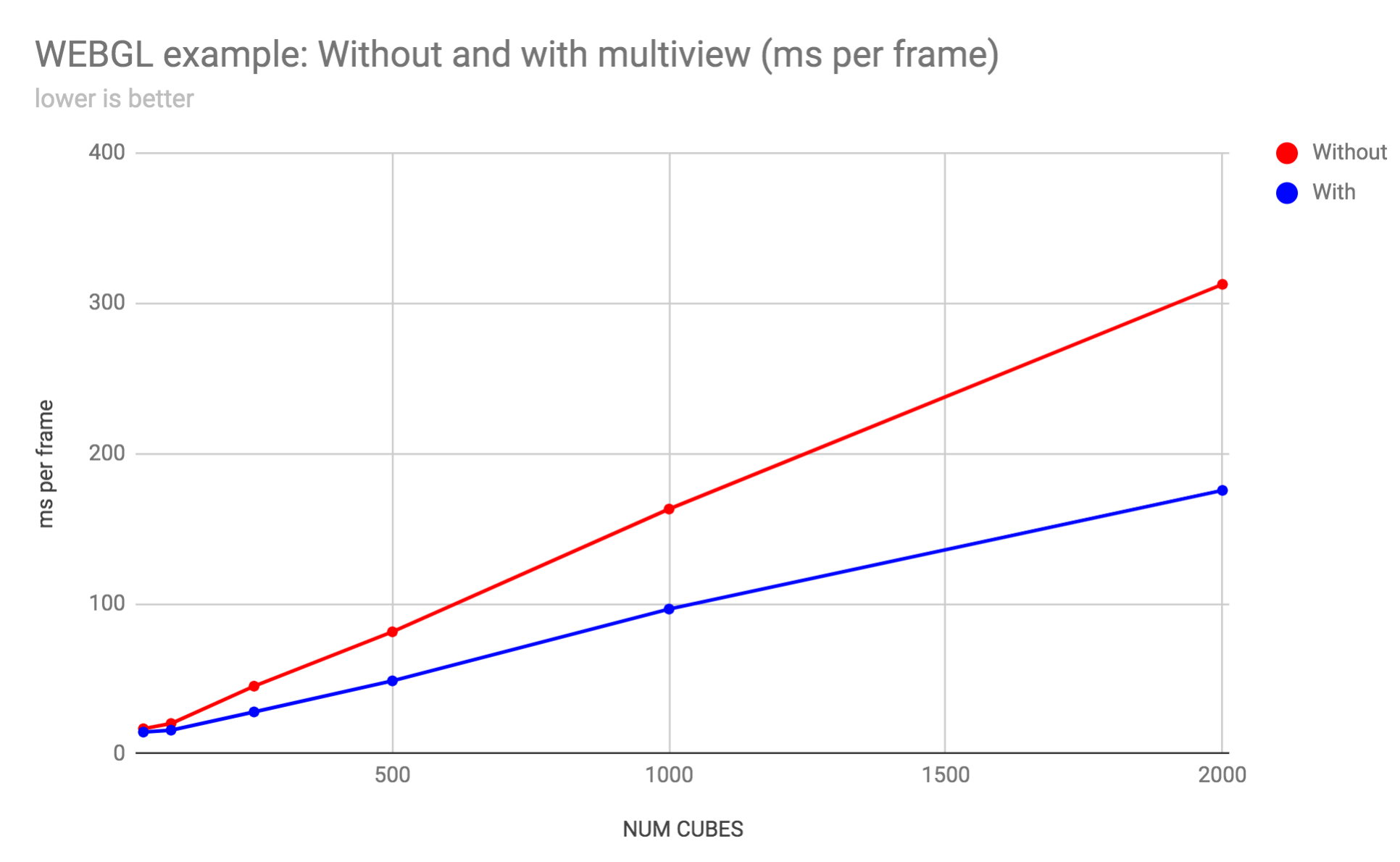

Most WebGL or WebXR applications are CPU bound: the more objects you have in the scene, the more draw calls you will submit to the GPU. In our benchmarks for stereo rendering with two views, we got a consistent improvement of ~40% compared to traditional rendering.

As the chart shows, the more cubes (draw calls) you have to render, the greater the performance improvement will be.

What’s next?

The main drawback of the current multiview extension is that there is no way to render to a multisampled backbuffer. In order to use it with WebXR you should set antialias: false when creating the context. However, this is something the WebGL WG is working on.

As soon as they come up with a proposal and it is implemented by browsers, 3D engines should support it automatically. Hopefully, we will see new extensions arriving in the WebGL and WebXR ecosystem to improve the performance and quality of rendering, such as the ones exposed by Nvidia VRWorks (e.g. Variable Rate Shading and Lens Matched Shading).

References

- https://developer.nvidia.com/vrworks/graphics/multiview

- https://developer.oculus.com/documentation/mobilesdk/latest/concepts/mobile-multiview/

- https://www.khronos.org/registry/OpenGL/extensions/OVR/OVR_multiview2.txt

- https://community.arm.com/developer/tools-software/graphics/b/blog/posts/optimizing-virtual-reality-understanding-multiview

- https://arm-software.github.io/opengl-es-sdk-for-android/multiview.html

- https://github.com/KhronosGroup/WebGL/issues/2912

- https://developer.oculus.com/documentation/oculus-browser/latest/concepts/browser-multiview/

* Header image by Nvidia VRWorks