Introduction

I have been using and contributing to three.js for quite a while, and I really love the library itself as well as the ecosystem and community around it.

I like to remember the first time I learned about the library: after a talk with Ricardo Cabello, I built an animated spikeball, as I explained in this old post:

WebXR hands

Oculus announced that they were releasing experimental support for the Hand Tracking API, and it happened that I was on vacation, so I decided to invest some time in testing the API and adding support for it to three.js.

It was pretty straightforward to get it working in three.js; the main pain point was designing an API that followed the same approach as the existing WebXR controller module.

Then I added a pinch detector so you could use it the same way the selectstart/selectend events work with physical controllers:
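As a rough illustration of the idea, here is a minimal sketch of a pinch detector, assuming it works by comparing the distance between the thumb tip and the index finger tip against a threshold. The thresholds, the `createPinchDetector` helper, and the returned event names are hypothetical and are not taken from the three.js implementation.

```javascript
// Hypothetical thresholds in meters. Using two values (hysteresis) avoids
// flickering between states when the fingers hover around a single cutoff.
const PINCH_START_DISTANCE = 0.02;
const PINCH_END_DISTANCE = 0.04;

// Euclidean distance between two {x, y, z} points.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Returns a detector whose update() takes the thumb-tip and index-tip
// positions for the current frame and returns 'pinchstart', 'pinchend',
// or null when the pinch state did not change.
function createPinchDetector() {
  let pinching = false;
  return {
    update(thumbTip, indexTip) {
      const d = distance(thumbTip, indexTip);
      if (!pinching && d < PINCH_START_DISTANCE) {
        pinching = true;
        return 'pinchstart';
      }
      if (pinching && d > PINCH_END_DISTANCE) {
        pinching = false;
        return 'pinchend';
      }
      return null;
    },
  };
}
```

In a real scene, the two positions would come from the hand joints reported each frame, and the returned value would be dispatched as an event on the hand object.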

But all the joy turned to sorrow when I tried to use a skinned mesh model:

The way the API returned the joints was confusing, and it did not match the way three.js expected them. It also didn't help that the mesh model I was using was broken. But after a few hours I found the fix, and here we have our hand mesh models!

I submitted a PR to three.js to support different profiles: geometry-based ones (boxes and spheres) and mesh models, such as a high-poly version from Oculus and a low-poly modification I made myself:

With the API in place, it was time to work on some fun experiments, like this watch showing the FPS stats on the scene:

Or this WIP piano demo:

WebXR controller haptics

When Firefox Reality and Oculus Browser implemented the haptics API, I worked on an example where you get a different vibration intensity (and sound) depending on which bar you hit.

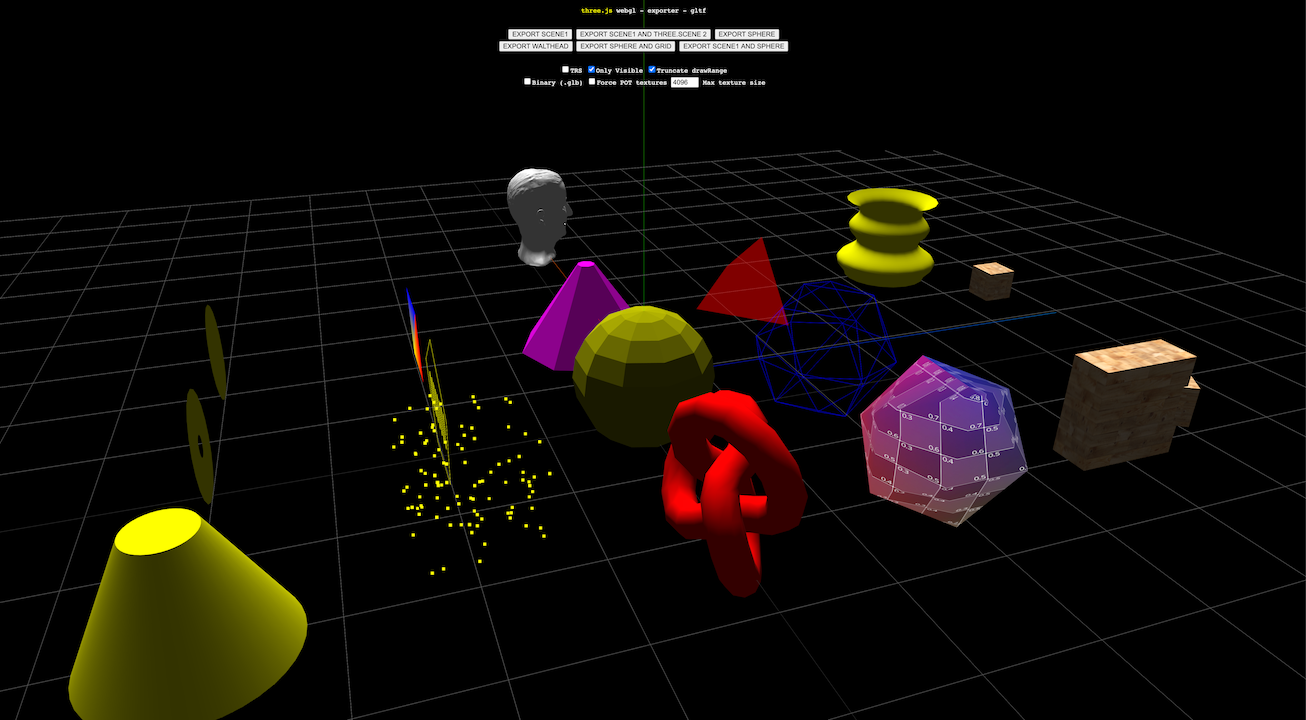
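A minimal sketch of how a bar hit could be turned into a haptic pulse, assuming the controller's gamepad exposes `hapticActuators` with a `pulse(intensity, duration)` method, as the browsers mentioned above did. The `intensityForBar` mapping, its linear curve, and the `minIntensity` default are hypothetical and not taken from the actual example.

```javascript
// Map a bar index (0 = first bar) to a vibration intensity in
// [minIntensity, 1]. The linear mapping is an assumption.
function intensityForBar(barIndex, barCount, minIntensity = 0.2) {
  if (barCount <= 1) return 1;
  const t = Math.min(1, Math.max(0, barIndex / (barCount - 1)));
  return minIntensity + t * (1 - minIntensity);
}

// Pulse the first haptic actuator of a gamepad, if it has one.
// Returns true when a pulse was requested, false otherwise.
function pulseForBar(gamepad, barIndex, barCount, durationMs = 100) {
  const actuators = gamepad && gamepad.hapticActuators;
  if (!actuators || actuators.length === 0) return false;
  if (typeof actuators[0].pulse !== 'function') return false;
  actuators[0].pulse(intensityForBar(barIndex, barCount), durationMs);
  return true;
}
```

In a WebXR session the `gamepad` argument would typically be the `gamepad` property of the controller's `XRInputSource`; the feature check keeps the code safe on browsers without haptics support.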

glTF Exporter

While working on demos like A-Painter, I needed an export format somewhat more advanced than plain OBJ, so I decided to implement a glTFExporter class for three.js and A-Frame (Read the article about the release).

I worked on generating valid output so that the exported glTF files could be used in third-party applications without errors. For example, Facebook added support for glTF around that time, so I worked with its validator to ensure our glTF files were valid.

I also added the Export GLTF option to the three.js editor.

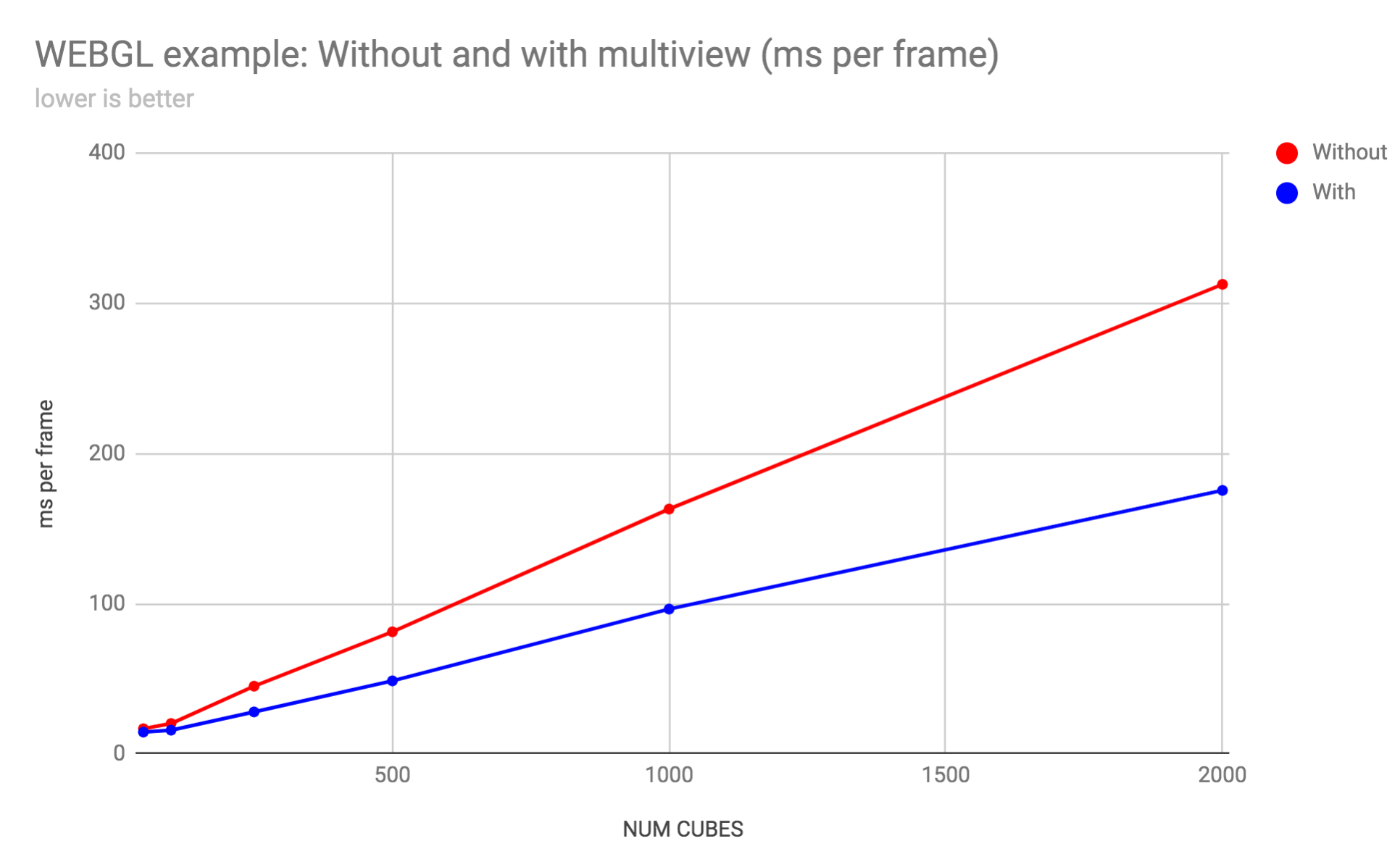

Multiview

I worked with our Firefox Reality team to add support for the OVR_multiview2 WebGL extension.

I built a raw WebGL demo to test the performance benefits in our browser, and we got a really nice improvement: around 40% for two views.

The result is even more impressive when rendering more views. Currently most browsers return 4 as the maximum number of views, so I created an example with four viewports. Render time improved from ~47ms to 17ms.

After testing it in all the browsers supporting the extension, I pushed a PR to three.js that includes an example showing an improvement from ~8ms down to ~4ms.

https://threejs.org/examples/misc_exporter_gltf.html

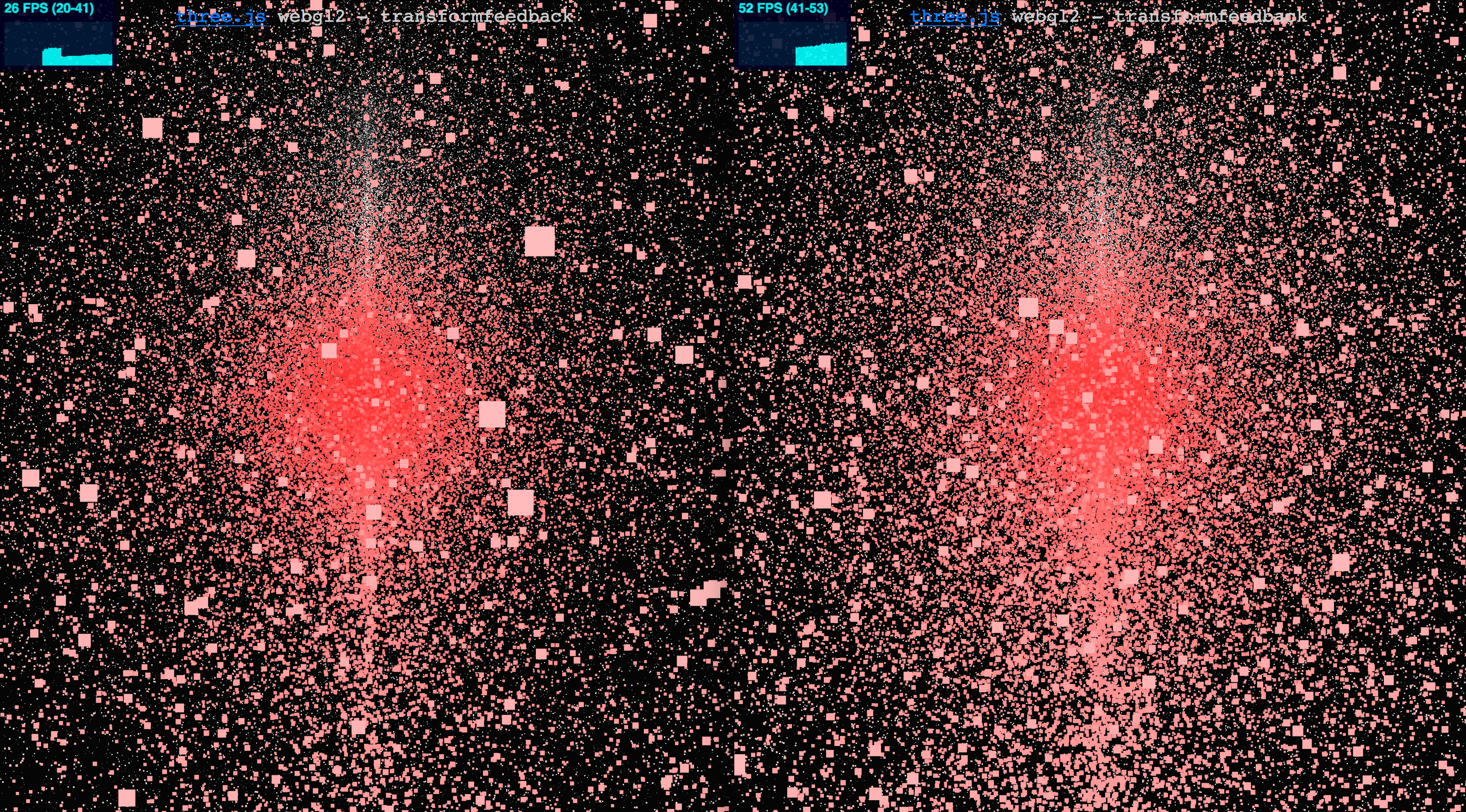

Transform feedback

I worked on a PR to add support for WebGLTransformFeedback to the WebGLRenderer for WebGL2.

This feature lets you do transforms on the GPU using the previously computed values, without needing to go back and forth between the CPU and the GPU. It helps improve performance in experiences that need a lot of transformations per frame, for example when working with a lot of particles, as in the following example:

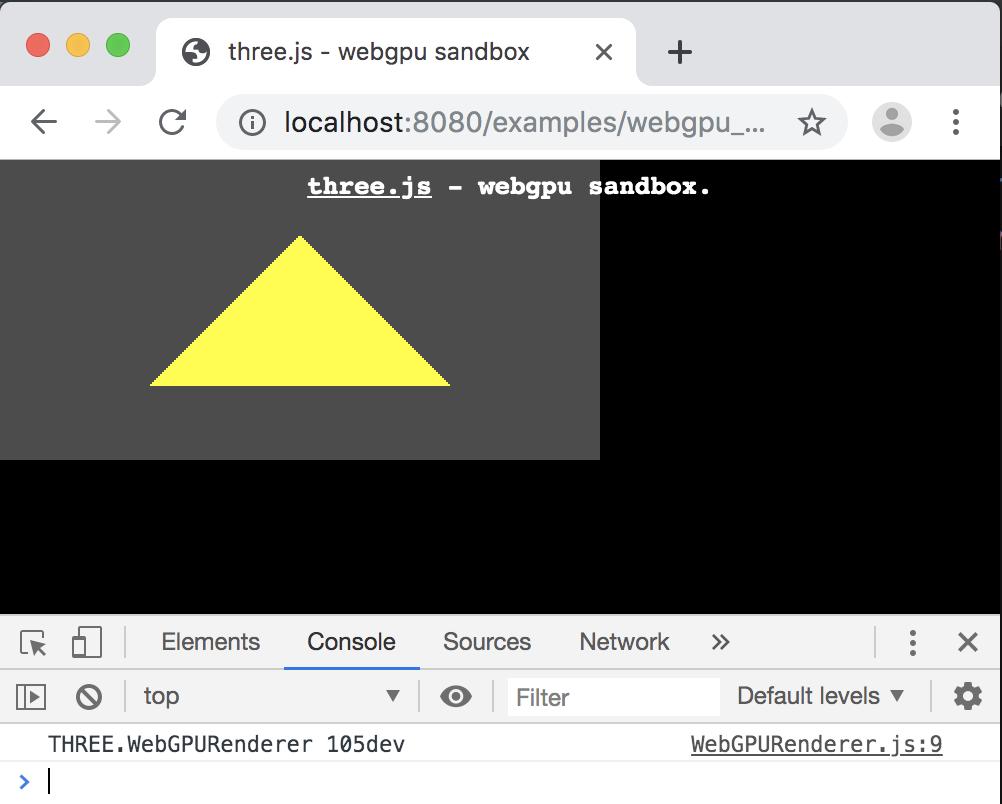
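To make the idea more concrete, here is a minimal sketch of one simulation step written against the raw WebGL2 API rather than the three.js PR, whose interface is not shown here. The `stepParticles` function and the shape of the `state` object are hypothetical; the sketch assumes the program, buffers, and vertex array objects were created elsewhere.

```javascript
// Advance a particle simulation by one step using WebGL2 transform feedback.
// `gl` is a WebGL2RenderingContext; `state` is a hypothetical object holding:
//   program - a linked program whose varyings were registered with
//             gl.transformFeedbackVaryings() before linking
//   vaos    - two vertex array objects, vaos[i] reading from buffers[i]
//   buffers - two equally sized buffers used in ping-pong fashion
//   tf      - a WebGLTransformFeedback object
//   read    - index (0 or 1) of the buffer holding the current values
//   count   - number of particles
function stepParticles(gl, state) {
  const write = 1 - state.read;

  gl.useProgram(state.program);
  gl.bindVertexArray(state.vaos[state.read]);

  // Capture the vertex shader outputs into the "write" buffer.
  gl.bindTransformFeedback(gl.TRANSFORM_FEEDBACK, state.tf);
  gl.bindBufferBase(gl.TRANSFORM_FEEDBACK_BUFFER, 0, state.buffers[write]);

  // Skip rasterization: this pass only updates data on the GPU.
  gl.enable(gl.RASTERIZER_DISCARD);
  gl.beginTransformFeedback(gl.POINTS);
  gl.drawArrays(gl.POINTS, 0, state.count);
  gl.endTransformFeedback();
  gl.disable(gl.RASTERIZER_DISCARD);

  // Unbind so the written buffer can be read as a vertex input next step.
  gl.bindBufferBase(gl.TRANSFORM_FEEDBACK_BUFFER, 0, null);
  gl.bindTransformFeedback(gl.TRANSFORM_FEEDBACK, null);
  gl.bindVertexArray(null);

  // Swap roles: the freshly written buffer becomes the next input.
  state.read = write;
}
```

The two buffers swap roles every step, so the new values never leave the GPU and nothing has to be read back or re-uploaded from JavaScript.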

WebGPU

WebGPU is going to be a major upgrade for graphics in the web ecosystem. It provides a modern API which is, thankfully, far less verbose than DX12 or Vulkan, but still powerful.

It can be used not just for rendering but also for general computation, using compute shaders.

I started a WebGPURenderer prototype in three.js, just to get an idea of how many pieces of code we should be updating while keeping the same API layer.

The current status is not that impressive so far, but the underlying implementation seems promising, and I expect that in the coming months we will start adding pieces to build this renderer in the main three.js repository.

Modify default shaders from three.js

Example showing a proof-of-concept implementation of how to modify the shaders of the default materials by injecting pieces of code into them.
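One way to do this kind of injection in three.js is the `onBeforeCompile` callback on a material, which receives the shader source before it is compiled. Whether the example uses exactly this hook is an assumption; the `injectAfterChunk` helper below is hypothetical and only illustrates the string manipulation involved.

```javascript
// Insert `code` right after a `#include <chunkName>` line in a GLSL source.
// Throws if the chunk is missing so a silent no-op cannot go unnoticed.
function injectAfterChunk(source, chunkName, code) {
  const marker = `#include <${chunkName}>`;
  if (!source.includes(marker)) {
    throw new Error(`Chunk not found: ${chunkName}`);
  }
  // A function replacement avoids special handling of "$" in `code`.
  return source.replace(marker, () => `${marker}\n${code}`);
}

// Hypothetical usage with a three.js material (not executed here):
//
// material.onBeforeCompile = (shader) => {
//   shader.vertexShader = injectAfterChunk(
//     shader.vertexShader,
//     'begin_vertex',
//     'transformed.y += sin(position.x * 4.0) * 0.1;'
//   );
// };
```

Injecting after a named chunk keeps the rest of the built-in material intact, so lighting, fog, and other features keep working while the custom code changes only one step.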

BufferGeometry drawcalls example

Example showing how to use drawcalls to dynamically modify the number of lines to draw.
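A minimal sketch of the idea, assuming a non-indexed line-segments geometry (two vertices per line) and the `setDrawRange(start, count)` method of `BufferGeometry` in current three.js. The `drawRangeForLines` and `showLines` helpers are hypothetical.

```javascript
// Compute the draw range that shows the first `lineCount` segments of a
// non-indexed line-segments geometry (two vertices per segment).
function drawRangeForLines(lineCount, maxLines) {
  const clamped = Math.max(0, Math.min(lineCount, maxLines));
  return { start: 0, count: clamped * 2 };
}

// Apply the range to any object exposing setDrawRange(start, count),
// such as a three.js BufferGeometry.
function showLines(geometry, lineCount, maxLines) {
  const { start, count } = drawRangeForLines(lineCount, maxLines);
  geometry.setDrawRange(start, count);
}
```

Because the vertex buffer is allocated once for the maximum number of lines, changing how many are drawn only updates the range and does not re-upload any data.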

Postprocessing effect: crossfade

Crossfade effect used to create a smooth transition between two scenes.
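At its core, a crossfade is a linear blend between the colors of the two rendered scenes. The sketch below shows that blend on the CPU for a single RGBA color; in a real post-processing pass the same operation would run per pixel in a fragment shader (for example with GLSL's `mix`). The `mixColor` helper is hypothetical.

```javascript
// Linear blend of two RGBA colors given as arrays of equal length.
// ratio = 0 returns color a, ratio = 1 returns color b.
function mixColor(a, b, ratio) {
  const t = Math.min(1, Math.max(0, ratio));
  return a.map((value, i) => value * (1 - t) + b[i] * t);
}
```

Animating `ratio` from 0 to 1 over time produces the smooth transition between the two scenes.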

Spikeball

My very first experiment with an early version of three.js, back in 2010. In fact, this example uses the canvas renderer, as WebGL wasn't widely available at the time. You can check the post I wrote about it.