Introduction

Whether you are building a game, a real-time framework or a browser engine, there are some common issues you have to deal with, such as how to check for regressions and how to run benchmarks and tests on your graphics code.

Most, if not all, of the JavaScript 3D engines, like A-Frame or three.js, are just tested manually before every release to check that nothing broke. As you can imagine, that helps catch obvious performance or rendering issues, but it won't catch trickier changes or bugs.

Of course you could always run the profiler to check the performance, but each browser provides its own custom solution. These are not user friendly, and they are really hard to automate across different browsers and platforms, especially if you are working with standalone devices.

So I started working on a tool to improve this workflow. The basic idea is really simple: we just want to be able to execute an application or a test for a specific number of frames and measure how long it takes. If you change the code in that application and it runs slower, you know you have a performance regression; if some rendered frames differ from the ones produced before the change, you know you have a regression in the renderer.

The main point here is to be able to run them deterministically, and without modifying any of the actual code of the application.
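To make the idea concrete, here is a minimal sketch in JavaScript (Node). It is not the tool's actual code: `createFakeClock`, `runFrames` and the fixed 60 Hz step are hypothetical names and values picked for illustration. The application only ever sees the fake clock, so its behaviour is the same on every run, while the real clock is used only to measure how long the frames took.

```javascript
// Hypothetical sketch: run a frame callback for a fixed number of frames
// using a fake clock, and measure the real time the run took.

function createFakeClock(stepMs) {
  let now = 0;
  return {
    now: () => now,                 // the time the application is allowed to see
    tick: () => { now += stepMs; }  // advance exactly one frame
  };
}

function runFrames(frameCallback, numFrames, stepMs = 1000 / 60) {
  const clock = createFakeClock(stepMs);
  const start = process.hrtime.bigint(); // real clock, used for measuring only
  for (let frame = 0; frame < numFrames; frame++) {
    frameCallback(clock.now(), frame);
    clock.tick();
  }
  const elapsedMs = Number(process.hrtime.bigint() - start) / 1e6;
  return { numFrames, elapsedMs };
}

// Example "application": its state depends only on the time it is given.
const positions = [];
const result = runFrames((time) => positions.push(Math.sin(time / 1000)), 100);
console.log(`${result.numFrames} frames in ${result.elapsedMs.toFixed(3)} ms`);
```

Running the same callback twice produces exactly the same `positions`, so any difference between two runs has to come from a change in the code under test.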

The complete list of requirements I had when designing the application was:

- Ability to execute any application deterministically without modifications

- Compare performance but also rendered frames

- Multiplatform: desktop and standalone devices such as XR headsets

- Support any browser: Chrome, Firefox, Firefox Reality, Safari, Oculus Browser, ...

- Command line tool (easy to automate)

- Results in a format easy to use for analysis

At the time I was working on this prototype I happened to meet Jukka Jylänki (formerly Mozilla Games team, currently Unity), who was working on pretty much the same approach I was planning to take: emunittest.

It was really useful to discuss ideas with him and to have access to his code, as my implementation started out heavily based on his codebase. I slowly started diverging from his needs, though (at that time he was focused mainly on Emscripten). For example, I got rid of all the Python code in favor of Node to keep the whole app in JavaScript, and I removed the need to modify the demos' HTML, so all the code is automatically injected by a proxy server.

Features

The overall list of features currently supported by the application covers the requirements described in the previous section:

- Command line tool: It starts a proxy server that will inject custom code into every application that you want to run.

- Ensure determinism: The code injected in every test hooks every non-deterministic API call:

  - requestAnimationFrame, Performance.now, Math.random, Date.now, and many more

  - WebXR: locked headset and controllers pose

- Input record and replay: It lets the user record and then replay input from the mouse and the keyboard (VR controller recording in progress).

- Fake WebGL and WebAudio API: You can run your tests using a fake WebGL and WebAudio API, which are basically NOP functions that do not break your application but let you measure the impact of your code without the overhead of these APIs.

- It generates a JSON or an HTML file with all the statistics.
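Two of the ideas in this list, hooking non-deterministic APIs and replacing an API with NOP functions, can be sketched as follows. This is only an illustration under assumptions, not the code the tool injects: `installDeterministicHooks` and `createNopContext` are hypothetical names, the random generator is mulberry32 (a small, well-known public-domain PRNG swapped in for the example), and a usable fake WebGL context would need to return plausible values from getters such as `getParameter` instead of `undefined`.

```javascript
// mulberry32: a small seeded PRNG (used here only for illustration).
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6D2B79F5) | 0;
    let t = Math.imul(a ^ (a >>> 15), 1 | a);
    t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical helper: replace Math.random and Date.now with
// deterministic versions. Time only moves when advanceFrame() is called.
function installDeterministicHooks(seed, frameStepMs) {
  const original = { random: Math.random, dateNow: Date.now };
  const random = mulberry32(seed);
  let fakeTime = 0;
  Math.random = () => random();
  Date.now = () => fakeTime;
  return {
    advanceFrame() { fakeTime += frameStepMs; },
    restore() {
      Math.random = original.random;
      Date.now = original.dateNow;
    }
  };
}

// Hypothetical helper: an object on which every method is a no-op.
function createNopContext() {
  const nop = () => undefined;
  return new Proxy({}, { get: () => nop });
}

const hooks = installDeterministicHooks(42, 16);
const firstRun = [Math.random(), Math.random(), Date.now()];
hooks.advanceFrame();
const afterOneFrame = Date.now(); // fake time advanced by one 16 ms step
hooks.restore();

const gl = createNopContext();
gl.drawArrays(0, 0, 3); // does nothing and does not throw
```

Installing the hooks again with the same seed yields the same sequence of "random" numbers, which is what makes two runs of the same test comparable.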

Reports

The tool generates a JSON file with all the data collected during the benchmarks/tests. In each JSON file you will find the following format for each test executed:

{

"test_id": "webxr-samples-fbo-scaling",

"stats": {

"perf": {

"fps": {

"min": 5.829544129649062,

"max": 41.42502071251032,

"avg": 35.470410612789095,

"standard_deviation": 4.577646000339063

},

"dt": {

"min": 3.5399999999999636,

"max": 259.1999999999998,

"avg": 28.398958958958957,

"standard_deviation": 10.209202061083992

},

"cpu": {

"min": 2.6250772081532516,

"max": 62.99559471364968,

"avg": 12.883571689380563,

"standard_deviation": 4.725107533597718

}

},

"webgl": {

"drawCalls": {

"min": 6,

"max": 54,

"avg": 53.42399999999993,

"standard_deviation": 4.6428680791080055

},

"useProgramCalls": {

"min": 2,

"max": 7,

"avg": 6.957999999999998,

"standard_deviation": 0.37180102205346277

},

"faces": {

"min": 24208,

"max": 38052,

"avg": 37868.35000000008,

"standard_deviation": 1550.9642399165666

},

"vertices": {

"min": 72624,

"max": 114156,

"avg": 113605.04999999987,

"standard_deviation": 4652.892719749728

},

"bindTextures": {

"min": 2,

"max": 27,

"avg": 26.673000000000002,

"standard_deviation": 2.769850356968763

}

},

"oculus_vrapi": {

"fps": {

"min": 19,

"max": 73,

"avg": 40.733333333333334,

"standard_deviation": 12.865803079827115

},

"tear": {

"min": 0,

"max": 0,

"avg": 0,

"standard_deviation": 0

},

"early": {

"min": 0,

"max": 0,

"avg": 0,

"standard_deviation": 0

},

"stale": {

"min": 0,

"max": 72,

"avg": 64.30000000000001,

"standard_deviation": 21.601157376399993

},

"mem": {

"min": 1295,

"max": 1804,

"avg": 1778.7,

"standard_deviation": 100.39560083323701

},

"app": {

"min": 1.16,

"max": 3.61,

"avg": 1.7003333333333335,

"standard_deviation": 0.635900848315906

}

}

},

"autoEnterXR": {

"requested": true,

"successful": false

},

"revision": 0,

"webaudio": {

"numAudioBuffers": 0,

"totalDuration": 0,

"totalLength": 0,

"totalDecodeTime": 0

},

"numFrames": 1000,

"totalTime": 30782.2,

"timeToFirstFrame": 2370.28,

"numStutterEvents": 34,

"totalRenderTime": 28411.920000000002,

"cpuTime": 3723.1999999999966,

"avgCpuTime": 3.7231999999999967,

"cpuIdleTime": 24647.360000000004,

"cpuIdlePerc": 86.7500682812003,

"pageLoadTime": 2255.54,

"result": "pass",

"logs": {

"errors": [],

"warnings": [],

"catchErrors": []

},

"testUUID": "1",

"browser": {

"name": "Firefox Reality Dev",

"code": "fxrd",

"versionCode": "1",

"versionName": "11"

},

"device": {

"name": "Quest",

"deviceProduct": "Quest",

"serial": "<SERIAL>"

}

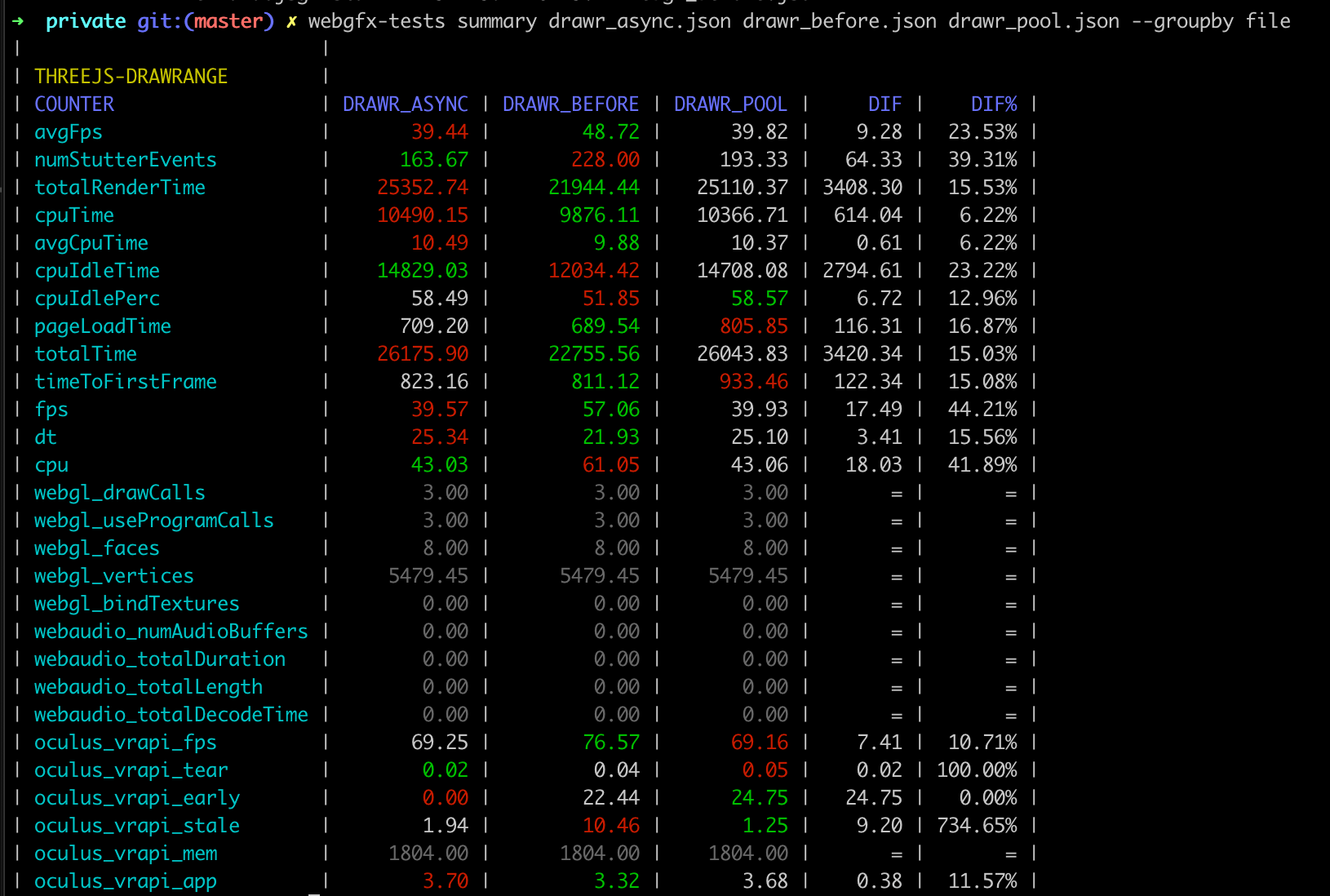

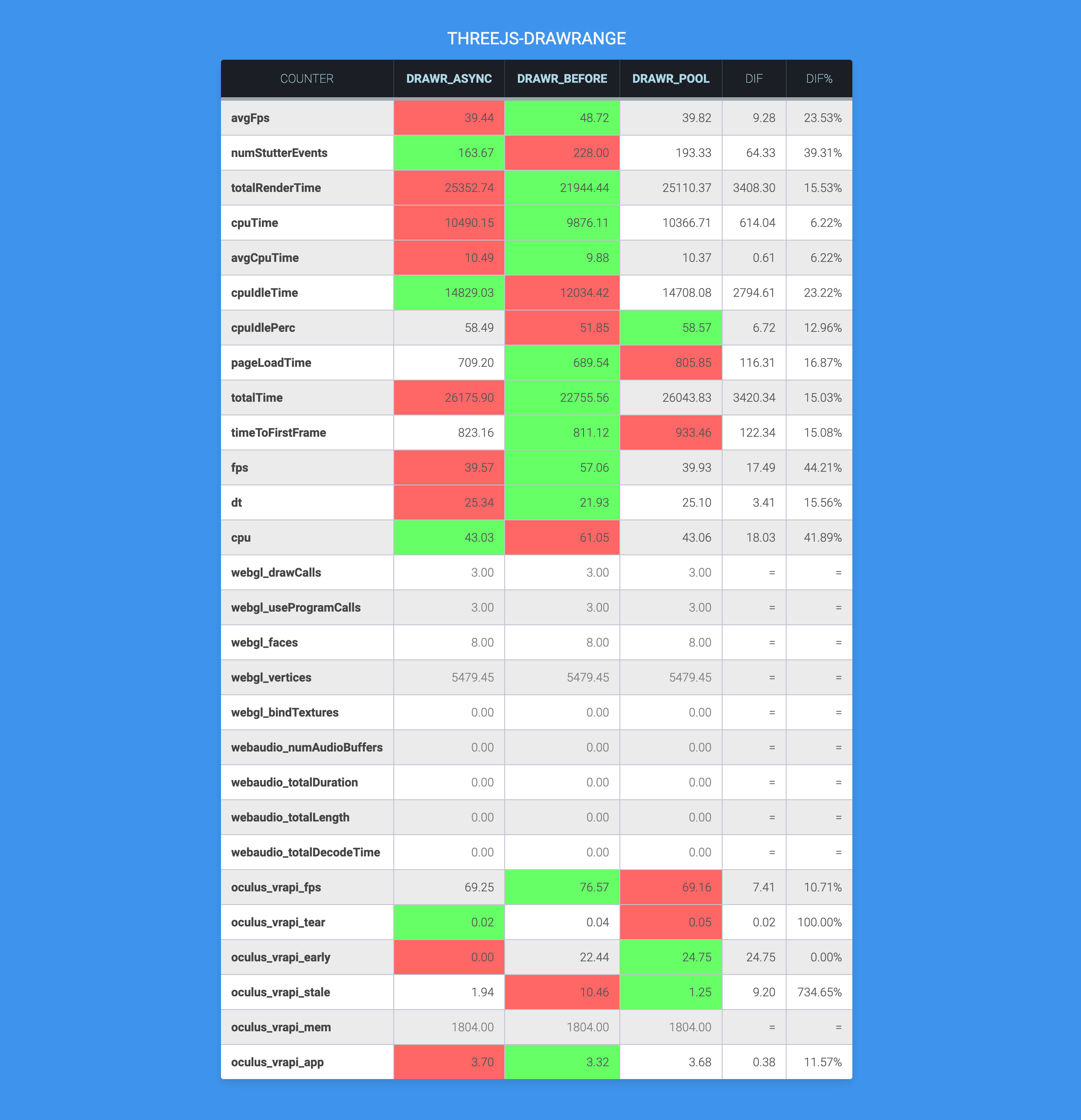

}It also provides an option to generate a report using multiple JSON files, grouping them for example by device, browser or file.
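Grouping several results could look roughly like the sketch below. It is not the tool's report generator: `groupResults` and `summarize` are hypothetical helpers, and the sample entries are made-up placeholder values that only reuse the field names from the JSON format (`device.name`, `stats.perf.fps.avg`).

```javascript
// Hypothetical helper: bucket test results by an arbitrary key.
function groupResults(results, keyFn) {
  const groups = new Map();
  for (const result of results) {
    const key = keyFn(result);
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(result);
  }
  return groups;
}

// Hypothetical helper: average the per-test average FPS of a group.
function summarize(results) {
  const total = results.reduce((sum, r) => sum + r.stats.perf.fps.avg, 0);
  return { tests: results.length, avgFps: total / results.length };
}

// Placeholder data (invented values, same shape as the report above).
const results = [
  { test_id: "test-a", device: { name: "Quest" }, stats: { perf: { fps: { avg: 30 } } } },
  { test_id: "test-b", device: { name: "Quest" }, stats: { perf: { fps: { avg: 40 } } } },
  { test_id: "test-a", device: { name: "Other device" }, stats: { perf: { fps: { avg: 60 } } } }
];

const byDevice = groupResults(results, (r) => r.device.name);
for (const [device, group] of byDevice) {
  console.log(device, summarize(group));
}
```

Passing a different key function, such as `(r) => r.browser.name` or `(r) => r.test_id`, gives the other groupings without changing the rest of the code.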

The tool can also publish an HTML report, which is easier to share.

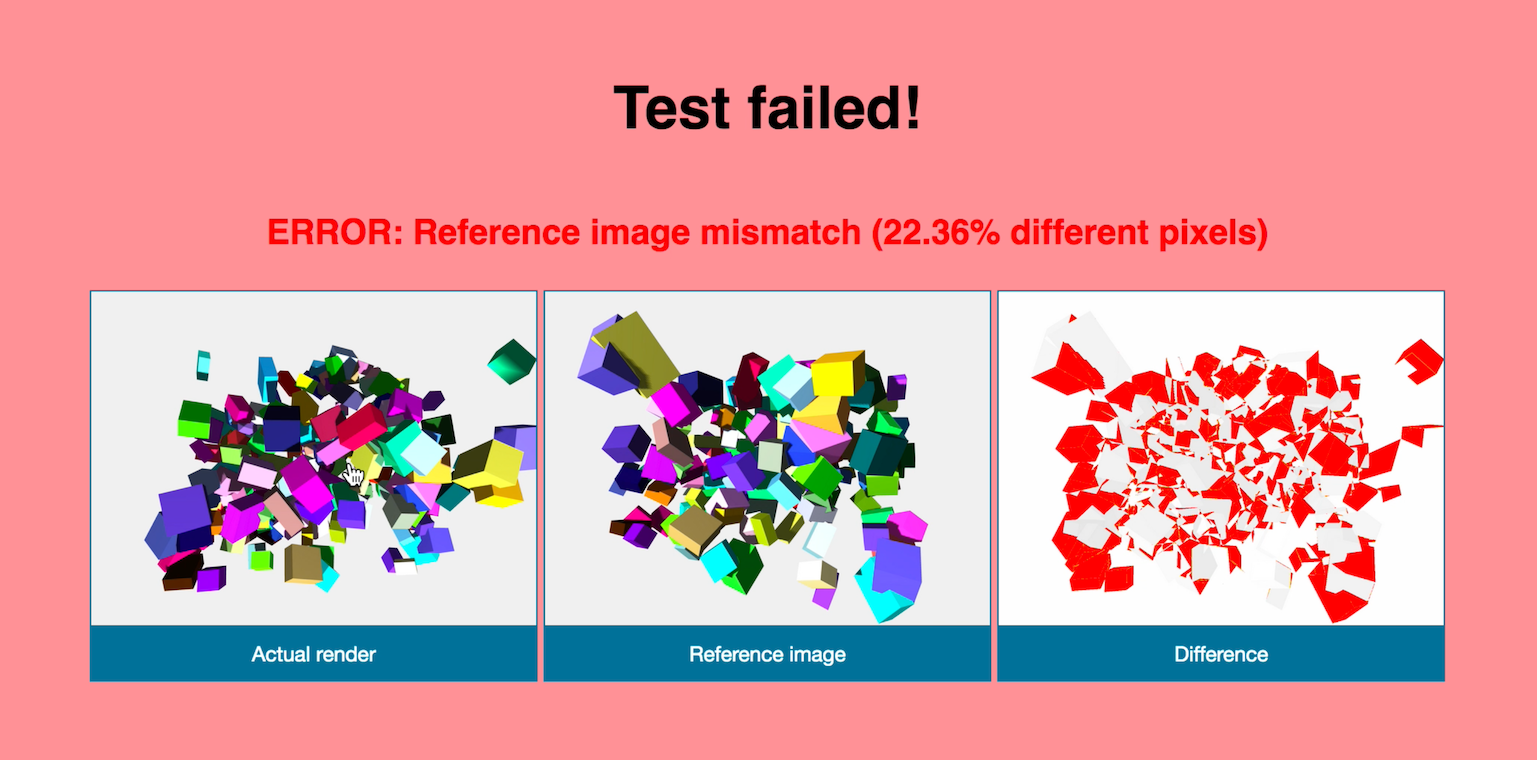

Reference image test

By default, the tool compares the last frame with a reference image, using a threshold that can be defined per test. But it could also compare any other given frame number during the test execution.
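As an illustration of what such a comparison can look like, here is a minimal sketch. The metric is an assumption on my side for the example (the fraction of pixels that differ), and `diffRatio` and `matchesReference` are hypothetical names; the tool may well compute the difference in another way.

```javascript
// Fraction of pixels (RGBA, 4 bytes each) where any channel differs
// by more than `channelTolerance`.
function diffRatio(actual, reference, channelTolerance = 0) {
  if (actual.length !== reference.length) {
    throw new Error("Images must have the same size");
  }
  let differentPixels = 0;
  for (let i = 0; i < actual.length; i += 4) {
    const delta = Math.max(
      Math.abs(actual[i] - reference[i]),         // red
      Math.abs(actual[i + 1] - reference[i + 1]), // green
      Math.abs(actual[i + 2] - reference[i + 2]), // blue
      Math.abs(actual[i + 3] - reference[i + 3])  // alpha
    );
    if (delta > channelTolerance) differentPixels++;
  }
  return differentPixels / (actual.length / 4);
}

// The test passes when the ratio stays within the per-test threshold.
function matchesReference(actual, reference, threshold) {
  return diffRatio(actual, reference) <= threshold;
}

// 2x2 images: all white, except one black pixel in the rendered frame.
const referenceImage = new Uint8ClampedArray(16).fill(255);
const renderedFrame = new Uint8ClampedArray(16).fill(255);
renderedFrame.set([0, 0, 0, 255], 4); // second pixel is black

console.log(diffRatio(renderedFrame, referenceImage));             // 0.25
console.log(matchesReference(renderedFrame, referenceImage, 0.3)); // true
console.log(matchesReference(renderedFrame, referenceImage, 0.1)); // false
```

In a browser, the pixel data could come from `gl.readPixels` or from a 2D canvas via `getImageData`.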

Demo

Multiple devices connected to the same computer

The following video shows a demo running tests on three standalone VR devices connected to my laptop: Oculus Quest, Pico Neo2 and Pico G2.

Recording

One important feature for testing interactive applications is the ability to record the user's input.

So I included the possibility to run the demo in record mode, in which it records, per frame, every user input (mouse and keyboard, VR coming soon).

Once the recording mode ends, you can decide if you want to use the recorded input and reference image for your next tests.
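The per-frame approach can be sketched like this. It is a simplified illustration, not the tool's implementation: `InputRecorder`, `InputReplayer` and the event shape are hypothetical, and in a browser the `dispatch` callback would typically create and dispatch real DOM events instead of logging them.

```javascript
// Hypothetical recorder: stores each input event with the frame it happened on.
class InputRecorder {
  constructor() {
    this.events = [];
  }
  record(frame, type, data) {
    this.events.push({ frame, type, data });
  }
  serialize() {
    return JSON.stringify(this.events);
  }
}

// Hypothetical replayer: re-emits the events when their frame is reached.
class InputReplayer {
  constructor(events, dispatch) {
    this.events = events;
    this.dispatch = dispatch;
    this.cursor = 0;
  }
  // Call once per frame, with the current frame number.
  tick(frame) {
    while (this.cursor < this.events.length &&
           this.events[this.cursor].frame <= frame) {
      const event = this.events[this.cursor++];
      this.dispatch(event.type, event.data);
    }
  }
}

// Record two inputs, then replay them over six frames.
const recorder = new InputRecorder();
recorder.record(2, "keydown", { key: "w" });
recorder.record(5, "mousedown", { x: 120, y: 80, button: 0 });

const replayLog = [];
let currentFrame = 0;
const replayer = new InputReplayer(
  JSON.parse(recorder.serialize()),
  (type, data) => replayLog.push({ frame: currentFrame, type, data })
);
for (currentFrame = 0; currentFrame < 6; currentFrame++) {
  replayer.tick(currentFrame);
}
console.log(replayLog);
```

Keying the events by frame number instead of by timestamp is what keeps the replay in sync with a deterministic frame loop.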

When replaying the input, I also added support for showing the keys pressed, for debugging (using a library I created):

I did the same for the mouse recording, so you can see the cursor and also a circle indicating when the user clicks:

WebXR Support

I worked with the Firefox Reality team to include a parameter when launching the browser activity (using ADB) to disable the user gesture requirement to enter VR. With that flag enabled, I could force entering VR so we could test the VR mode without any user interaction.

A major issue we had was that, depending on where your controllers were placed when running the demo, they could be close to the camera, affecting the rendering, or raycasting against some object, affecting the overall performance.

To fix this, I hooked the WebXR API to return a specific controller pose, ignoring the real controller's pose.
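The general shape of such a hook is sketched below, under several assumptions: `lockPose` is a hypothetical helper, the pose is a plain object that only mimics the `transform.position` / `transform.orientation` shape of a WebXR pose, and `FakeXRFrame` is a stand-in so the example runs outside a browser. In a browser the wrapped method would be `XRFrame.prototype.getPose`, and an application reading `transform.matrix` would need that field as well.

```javascript
// Hypothetical helper: wrap getPose so selected spaces always report a fixed pose.
// Returns a function that removes the hook again.
function lockPose(frameProto, isLockedSpace, fixedPose) {
  const originalGetPose = frameProto.getPose;
  frameProto.getPose = function (space, baseSpace) {
    if (isLockedSpace(space)) return fixedPose; // ignore the real pose
    return originalGetPose.call(this, space, baseSpace);
  };
  return () => { frameProto.getPose = originalGetPose; };
}

// Stand-in for the browser's XRFrame, reporting a "real" device pose.
class FakeXRFrame {
  getPose(space, baseSpace) {
    return {
      transform: {
        position: { x: 9, y: 9, z: 9, w: 1 },
        orientation: { x: 0, y: 0, z: 0, w: 1 }
      }
    };
  }
}

const controllerSpace = { name: "controller" };
const otherSpace = { name: "other" };
const fixedPose = {
  transform: {
    position: { x: 0, y: 1, z: -0.5, w: 1 },
    orientation: { x: 0, y: 0, z: 0, w: 1 }
  }
};

const unlock = lockPose(
  FakeXRFrame.prototype,
  (space) => space === controllerSpace,
  fixedPose
);

const frame = new FakeXRFrame();
console.log(frame.getPose(controllerSpace, null).transform.position); // fixed pose
console.log(frame.getPose(otherSpace, null).transform.position);      // real pose
```

Because the hook is installed on the prototype, the application code calling `getPose` does not need to change.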

I could make the controllers disappear, move them around programmatically as you can see in the next video, or replay recorded movements.

I took a similar approach for the headset itself, ignoring the VR pose provided by the API and injecting my own custom one.

Results and devices database

I was working on a database (MongoDB) to store all the information from the devices, browsers and test results for every team member and device we own, so we could easily check regressions in the projects where we use this.